
Metaphysician Undercover

Apokrisis was talking about a generic force rather than a generic cause, or generic agent. I think it makes sense to speak of a general background condition that isn't happily conceived of as a cause of the events that it enables to occur (randomly, at some frequency). — Pierre-Normand

I think my criticism still holds if this is what was meant. If the particular causes cannot be identified, it is a cop-out to claim it's a "general background condition". All you are saying is that you cannot isolate the particular causes, but you know that there was something or some things within the general conditions which acted as cause. And if you make the "general background condition" into a unified entity, which acts as a cause, you have the other problem I referred to.

So, there may be events that are purely accidental and, hence, don't have a cause at all although they may be expected to arise with some definite probabilistic frequencies. Radioactive decay may be such an example. Consider also Aristotle's discussion of two friends accidentally meeting at a well. Even though each friend was caused to get there at that time (because she wanted to get water at that time, say), there need not be any cause for them to have both been there at the same time. Their meeting is a purely uncaused accident, although some background condition, such as there being only one well in the neighborhood, may have made it more likely. — Pierre-Normand

There is a clear problem with this example, and this is the result of expecting that an event has only one cause. When we allow that events have multiple causes, then each of the two friends has reasons (causes) to be where they are, and these are the causes of their chance meeting. So the event, the chance meeting, is caused, but it has multiple causes which must all come together. When we look for "the cause", in the sense of a single cause, for an event which was caused by multiple factors, we may well conclude that the event has no cause, because there is no such thing as "the cause" of the event, there is a multitude of necessary factors, causes.
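Pierre-Normand's radioactive-decay example (individual events with no identifiable cause, yet a definite overall frequency) can be illustrated with a toy simulation. This is a minimal sketch under stated assumptions: each atom is assumed to decay independently with the same fixed probability per time step, and the function names and parameter values are hypothetical, not taken from the thread.

```python
import random


def simulate_decays(n_atoms, p_decay, steps, seed=0):
    """Fraction of atoms decayed after `steps` steps (assumed toy model).

    Every surviving atom gets the same independent chance `p_decay` per step;
    nothing in the model picks out which atom decays when.
    """
    rng = random.Random(seed)
    remaining = n_atoms
    for _ in range(steps):
        decayed_now = sum(1 for _ in range(remaining) if rng.random() < p_decay)
        remaining -= decayed_now
    return 1 - remaining / n_atoms


def expected_fraction(p_decay, steps):
    # Closed form for the same assumed model: 1 - (1 - p)^steps.
    return 1 - (1 - p_decay) ** steps


# Different seeds decay different atoms, but the overall fraction barely moves.
for seed in (1, 2, 3):
    print(round(simulate_decays(20000, 0.05, 20, seed), 3))
print(round(expected_fraction(0.05, 20), 3))
```

The run-to-run spread shrinks as `n_atoms` grows, which is the sense in which the frequency is "definite" even though no individual decay is singled out by anything in the model.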

apokrisis

If the particular causes cannot be identified, it is a cop-out to claim it's a "general background condition" — Metaphysician Undercover

It is not that they can't be identified. It is that the identification would miss the causal point.

It is the inability to suppress fluctuations in general, rather than the occurrence of some fluctuation in particular, which is the contentful fact.

Pierre-Normand

There is a clear problem with this example, and this is the result of expecting that an event has only one cause. When we allow that events have multiple causes, then each of the two friends has reasons (causes) to be where they are, and these are the causes of their chance meeting. — Metaphysician Undercover

It's true, in a sense, that 'events' have multiple causes. Recent work on the contrastive character of causation and of explanation highlights this. But what it highlights, and what Aristotelian considerations also highlight, is somewhat obscured by the tendency in modern philosophy to individuate 'events' (and hence, also, effects) in an atomic manner, as if they were local occurrences in space and in time that are what they are independently of their causes, or of the character of the agents, and of their powers, that cause them to occur. This modern tendency is encouraged by broadly Humean considerations on causation, and by the metaphysical realism of modern reductionist science and of scientific materialism.

If we don't endorse metaphysical realism, then we must acknowledge that the event consisting in the two acquaintances meeting at the well can't be identified merely with 'what happens there and then' quite apart from our interest in the non-accidental features of this event that we have specifically picked out as a topic of inquiry. Hence, the event consisting in the two individuals' meeting can't be exhaustively decomposed into two separate component events, each one consisting in the arrival of one individual at the well at that specific time. The obvious trouble with this attempted decomposition is that a complete causal explanation of each one of the 'component events' might do nothing to explain the non-accidental nature of the meeting, in the case where the meeting is indeed not accidental. In the case where it is accidental, one might acknowledge, following Aristotle, that the 'event' is purely an accident and doesn't have a cause under that description (that is, viewed as a meeting).

So the event, the chance meeting, is caused, but it has multiple causes which must all come together.

Well, either it's a chance encounter or it's a non-accidental meeting. Only in the latter case might a cause be found that is constitutive of the event being a meeting (maybe willed by a third individual, or probabilistically caused by non-accidental features of the surrounding topography, etc.).

When we look for "the cause", in the sense of a single cause, for an event which was caused by multiple factors, we may well conclude that the event has no cause, because there is no such thing as "the cause" of the event, there is a multitude of necessary factors, causes.

Agreed. Those separate causes, though, may separately explain the different features of the so-called 'event' without amounting to an explanation of why the whole 'event', as such, came together, and hence fail to constitute a cause for it (let alone the cause).

Metaphysician Undercover

It is not that they can't be identified. It is that the identification would miss the causal point. — apokrisis

How could identifying the causes miss the causal point?

It's true, in a sense, that 'events' have multiple causes. Recent work on the contrastive character of causation and of explanation highlights this. But what it highlights, and what Aristotelian considerations also highlight, is somewhat obscured by the tendency in modern philosophy to individuate 'events' (and hence, also, effects) in an atomic manner, as if they were local occurrences in space and in time that are what they are independently of their causes, or of the character of the agents, and of their powers, that cause them to occur. This modern tendency is encouraged by broadly Humean considerations on causation, and by the metaphysical realism of modern reductionist science and of scientific materialism. — Pierre-Normand

I believe that an "event" is completely artificial, in the sense that "an event" only exists according to how it is individuated by the mind which individuates it. So the problem you refer to here is a function of this artificiality of any referred-to event. It is a matter of removing something from its context, as if it could be an individual thing without being part of a larger whole.

If we don't endorse metaphysical realism, then we must acknowledge that the event consisting in the two acquaintances meeting at the well can't be identified merely with 'what happens there and then' quite apart from our interest in the non-accidental features of this event that we have specifically picked out as a topic of inquiry. Hence, the event consisting in the two individuals' meeting can't be exhaustively decomposed into two separate component events, each one consisting in the arrival of one individual at the well at that specific time. The obvious trouble with this attempted decomposition is that a complete causal explanation of each one of the 'component events' might do nothing to explain the non-accidental nature of the meeting, in the case where the meeting is indeed not accidental. In the case where it is accidental, one might acknowledge, following Aristotle, that the 'event' is purely an accident and doesn't have a cause under that description (that is, viewed as a meeting). — Pierre-Normand

So here's the issue. If we are allowed to individuate events, removing them from their context, then we may look at events as distinct, independent of their proper time and space. By doing this, we can say that any two events are accidental, or, to use the common term, coincidental. So the two friends meet by coincidence, but any two events, when individuated and removed from context, may be seen as coincidental, despite the fact that we might see them as related in a bigger context. And when we see things this way, we have to ask whether any events are really accidental or coincidental. It might just be the way they are individuated and removed from context that makes them appear this way.

apokrisis

How could identifying the causes miss the causal point? — Metaphysician Undercover

You missed the point. Read what I wrote and reply to what I wrote.

The framework is Aristotelian. Material/efficient causes are being opposed to formal/final causes. Don't pretend otherwise.

Pierre-Normand

I believe that an "event" is completely artificial, in the sense that "an event" only exists according to how it is individuated by the mind which individuates it. So the problem you refer to here is a function of this artificiality of any referred-to event. It is a matter of removing something from its context, as if it could be an individual thing without being part of a larger whole. — Metaphysician Undercover

I agree with this. I am indeed stressing the fact that the event doesn't exist -- or can't be thought of, or referred to, as the sort of event that it is -- apart from its relational properties. And paramount among those constitutive relational properties are some of the intentional features of the 'mind' who is individuating the event, in accordance with her practical and/or theoretical interests, and embodied capacities.

(...) And when we see things this way, we have to ask whether any events are really accidental or coincidental. It might just be the way they are individuated and removed from context that makes them appear this way.

Yes, indeed. The context may be lost owing to a tendency to attempt to reduce the event to a description of its material constituent processes that abstracts away from the relevant relational and functional properties of the event (including constitutive relations to the interests and powers of the inquirer). But the very same reductionist tendency can lead one to assume that whenever a 'composite' event appears to be a mere accident there ought to be an underlying cause of its occurrence expressible in terms of the sufficient causal conditions of the constituent material processes purportedly making up this 'event'. Such causes may be wholly irrelevant to the explanation of the occurrence of the composite 'event', suitably described as the purported "meeting" of two human beings at a well, for instance.

TheMadFool

I understand it's a statistical(?) argument. If any of a, b, c, ..., x, y, z could cause an event, then it doesn't matter which of them actually causes it, since the event is inevitable.

Does this argument work for crime, where responsibility for actions is a cornerstone?

Metaphysician Undercover

You missed the point. Read what I wrote and reply to what I wrote. — apokrisis

To tell you the truth, I saw your statement as irrelevant, and bordering on meaningless gibberish.

It is the inability to suppress fluctuations in general, rather than the occurrence of some fluctuation in particular, which is the contentful fact. — apokrisis

We're discussing physical causes in inanimate objects. The "inability to suppress fluctuations" is a given, a background condition. Newton's law of inertia does not state that a body which has the "ability to suppress fluctuations" will remain in an inertial state. It is assumed that the inanimate body has the "inability to suppress fluctuations". There is no such thing in physics as the capacity to resist potential causes (ability to suppress fluctuations); that would be an overriding supernatural power which would turn physics into nonsense.

Clearly, it is the existence of particular fluctuations which is of interest to physicists, not an inability to suppress fluctuations in general, which implies the existence of a supernatural capacity to suppress particular fluctuations in the first place.

apokrisis

My OP was explicitly directed at the issue of spontaneous symmetry breaking where the situation is so unstable that any old accident is going to produce the same inevitable effect. So it would only apply to crime to the degree there was some similar causality in play.

However, because I am expressing a general constraints-based view of causality, you could say that responsibility is about limiting antisocial behaviour to some point where a community becomes indifferent to what you are doing.

If you wear your socks inside out, that doesn’t really matter, regardless of whether the act is accidental or deliberate. But if you bump into someone in the street and hurt them badly, then the difference would tend to matter.

My OP wasn’t ruling out the idea of deliberate action. It was focused on the causation of accidents in unstable situations.
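The "any old accident is going to produce the same inevitable effect" structure of spontaneous symmetry breaking is often illustrated with the pitchfork bifurcation, the textbook normal form for buckling-type instabilities. The sketch below assumes that normal form, dx/dt = μx - x³, and a crude forward-Euler integrator; neither comes from the OP, and `settle` is a hypothetical name. For μ > 0 the symmetric state x = 0 is unstable: any tiny perturbation ends at one of the two mirror-image states x = ±√μ, and the accident only decides which one.

```python
def settle(mu, x0, dt=0.01, t_end=60.0):
    """Integrate dx/dt = mu*x - x**3 from x0 with forward Euler; return x(t_end)."""
    x = x0
    for _ in range(int(t_end / dt)):
        x += dt * (mu * x - x ** 3)
    return x


# Unstable symmetric state (mu = 1): wildly different tiny "accidents"...
for accident in (1e-12, 3e-7, -1e-9, -0.02):
    print(accident, round(settle(1.0, accident), 6))
# ...all end at +1 or -1. Stable case (mu = -1): the perturbation just dies out.
print(round(settle(-1.0, 1e-3), 6))
```

Only an exactly zero perturbation leaves the system at x = 0, which is the idealisation that the "fluctuations are always present" view denies.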

LD Saunders

Apokrisis: Have you studied physics? Where you had to solve actual quantitative problems in mechanics? I'm just not sure what you are trying to argue here, and decades ago I earned a degree in physics.

apokrisis

So did you study the physics of spontaneous symmetry breaking? Did you get to the bottom of the buckling beam problem and other examples of bifurcation?

Cheeky bastard.

apokrisis

If the particle is perfectly balanced on top of the dome, then there it shall remain until some net force moves it. — LD Saunders

This was your original point. And note how it relies on a notion of "net force". So the Newtonian view already incorporates the kind of holism I'm talking about.

A system in balance by definition has merely zeroed the effective forces being imposed on it by its total environment. The Newtonian formalism doesn't specify an absence of imposed forces. It just says that any fluctuations present are balanced to a degree that no particular acceleration is making a difference.

So the point about a ball balanced on a dome is you can see this is an unstable situation. There is a strong accelerative force acting on the ball - gravity. The situation is very tippable. The slightest fluctuation will lead to a runaway change. Down the ball will roll.
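The claim that "the slightest fluctuation will lead to a runaway change" can be quantified for a smooth hilltop. This is a minimal sketch, assuming the top of the hill is smooth enough to linearise as x'' = kx (so it is not Norton's non-Lipschitz dome) and that the ball starts at rest a small distance x0 from the top; `escape_time` is a hypothetical helper. The exact solution is x(t) = x0·cosh(√k·t), so a ball placed exactly at x0 = 0 stays put, while any nonzero offset escapes in a time that grows only logarithmically as the offset shrinks.

```python
import math


def escape_time(x0, distance=1.0, k=1.0):
    """Time for a ball released at rest x0 from the top to get `distance` away,
    under the linearised equation x'' = k*x, whose solution is x0*cosh(sqrt(k)*t).
    Requires 0 < x0 <= distance.
    """
    return math.acosh(distance / x0) / math.sqrt(k)


for x0 in (1e-3, 1e-6, 1e-9, 1e-12):
    print(x0, round(escape_time(x0), 2))
# Each thousand-fold reduction in the offset adds only about ln(1000) ~ 6.9 time units.
```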

It now matters rather a lot whether the "net force" describes a literal absence of any further environmentally imposed force, or whether it represents a state where the fluctuations are coming from all directions and somehow - pretty magically - cancel themselves out to zero ... until the end of time.

So that was my point. The conventional view of causality likes to treat reality as a void. Nominalism rules. All actions are brutely particular. But conversely, reality can be seen as a warm bath of fluctuations. And now the kind of simple causality we associate with an orderly world has to be an emergent effect. It arises to the degree that fluctuations are mostly suppressed or ignored. It relies on a system having gone to the stability of a thermodynamic equilibrium.

But if fluctuations are only being suppressed to give us our "fluctuation-free" picture of causality, then that means they still remain. That then becomes a useful physical fact to know. It becomes a way of modelling the physics of instabilities or bifurcations.

And metaphysically, it says instability is fundamental to nature, stability is emergent at best. And that flips any fundamental question. Instead of focusing on what could cause a change, deep explanations would want to focus on what could prevent a change. Change is what happens until constraints arise to prevent it.

So this is how holism becomes opposed to reductionism. It is the different way of thinking that moves us from a metaphysics of existence to a metaphysics of process.

Metaphysician Undercover

But the very same reductionist tendency can lead one to assume that whenever a 'composite' event appears to be a mere accident there ought to be an underlying cause of its occurrence expressible in terms of the sufficient causal conditions of the constituent material processes purportedly making up this 'event'. Such causes may be wholly irrelevant to the explanation of the occurrence of the composite 'event', suitably described as the purported "meeting" of two human beings at a well, for instance. — Pierre-Normand

It is when we allow for the ideas, thoughts, and intentions of the individuals that the causal waters are muddied. It is only because the two people know each other, and recognize each other, that their chance meeting at the well is a significant event. Otherwise it would be two random people meeting at the well, and an insignificant event.

This is also what happens when Aristotle explains the causal significance of chance and fortune. Chance is a cause in relation to fortune: when one of the two people who meet by chance owes the other money, and the debt is collected, the chance occurrence causes good fortune. Likewise, in a lottery draw a chance occurrence is the cause of good fortune. And some chance occurrences, such as accidents, cause bad fortune. But "fortune" only exists in relation to the well-being of an individual. So it's only within this framework of determining things as good or bad that we say chance is a cause. The event is "caused" by whatever things naturally lead to it, but that it caused fortune is in relation to the well-being of individuals.

You seem to be headed off on some tangent, and I have no idea what you're trying to say. The uncertainty principle is a reflection of the Fourier transform, which describes uncertainty in the relationship between time and wave frequency.

And metaphysically, it says instability is fundamental to nature, stability is emergent at best. And that flips any fundamental question. Instead of focusing on what could cause a change, deep explanations would want to focus on what could prevent a change. Change is what happens until constraints arise to prevent it. — apokrisis

This is a false conclusion, though. The ball has to get there, to its position on the top of the dome. This requires stability. Stability is your stated premise: the ball is there, it has the capacity, by its inertial mass, to suppress fluctuations, and therefore it has a position. You cannot turn around now and say that the ball rolls because of its incapacity to suppress fluctuations, and that therefore instability is fundamental, without accepting contradictory premises. Stability is fundamental and instability is fundamental.

apokrisis

Stability is fundamental and instability is fundamental. — Metaphysician Undercover

Yep. So one is the view I would be arguing for, the other would be the one I would be arguing against. Stability was not my stated premise. It was the premise which I challenged.

TheMadFool

My OP wasn’t ruling out the idea of deliberate action. It was focused on the causation of accidents in unstable situations. — apokrisis

I think you have a point.

Consider an event A. In terms of necessary causes, we can identify events x and y. These events (x and y) set the stage for A. Now along comes event z, which is the sufficient cause and results in the actualization of event A.

So, if I understand you, all events (x, y and z) are important. The instability due to x and y is causally important in our equation.

Also, there could be another event w causally equivalent to z that could've taken advantage of the unstable situation resulting from the conjunction of necessary causes x and y.

In such cases we could say that the event A is inevitable.
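TheMadFool's scheme can be written as a toy Boolean model. This is a hypothetical sketch, not anything proposed in the thread: x and y are treated as jointly necessary background conditions, and z and w as interchangeable triggers, either of which suffices once x and y hold. The last function adds a further assumption of n independent potential triggers, each occurring with probability p, to show in what sense A becomes "inevitable".

```python
from itertools import product


def event_a(x, y, z, w):
    """A happens iff both background conditions hold and at least one trigger occurs."""
    return x and y and (z or w)


def worlds_where_a_happens():
    # Enumerate all 16 combinations of (x, y, z, w) and keep those producing A.
    return [combo for combo in product([False, True], repeat=4) if event_a(*combo)]


def prob_a_given_background(n_triggers, p):
    # With x and y in place, A fails only if every independent trigger fails.
    return 1 - (1 - p) ** n_triggers


print(worlds_where_a_happens())
print(round(prob_a_given_background(1, 0.05), 3), round(prob_a_given_background(100, 0.05), 3))
```

Which trigger fires makes no difference to whether A occurs; what the background conditions x and y settle is that, with enough potential triggers, some trigger is very likely to arrive.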

LD Saunders

Apokrisis: You are simply talking nonsense. I'll stick with real physics, and leave you to your absurdities.

SophistiCat

In Determinism: what we have learned and what we still don't know (2005), John Earman "survey[s] the implications of the theories of modern physics for the doctrine of determinism" (see also Earman 2007 and 2008 for a more technical analysis). When it comes to Newtonian mechanics, he gives several indeterministic scenarios that are permitted under the theory, including Norton's dome from the OP. Another well-known example is that of the "space invaders":

Certain configurations of as few as 5 gravitating, non-colliding point particles can lead to one particle accelerating without bound, acquiring an infinite speed in finite time. The time-reverse of this scenario implies that a particle can just appear out of nowhere, its appearance not entailed by a preceding state of the world, thus violating determinism.

A number of such determinism-violating scenarios for Newtonian particles have been discovered, though most of them involve infinite speeds, infinitely deep gravitational wells of point masses, contrived force fields, and other physically contentious premises.

Norton's scenario is interesting in that it presents an intuitively plausible setup that does not involve the sort of singularities, infinities or supertasks that would be relatively easy to dismiss as unphysical. has already homed in on one suspect feature of the setup, which is the non-smooth, non-analytic shape of the surface and the displacement path of the ball. Alexandre Korolev in Indeterminism, Asymptotic Reasoning, and Time Irreversibility in Classical Physics (2006) identifies a weaker geometric constraint than that of smoothness or analyticity, which is Lipschitz continuity:

A function $f$ is called Lipschitz continuous if there exists a positive real constant $K$ such that, for all real $x_1$ and $x_2$, $|f(x_1) - f(x_2)| \le K\,|x_1 - x_2|$. A Lipschitz-continuous function is continuous, but not necessarily smooth. Intuitively, the Lipschitz condition puts a finite limit on the function's rate of change.
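The Lipschitz condition can be probed numerically with difference quotients. This is a minimal sketch, assuming the standard presentation of Norton's dome in which (in suitable units) the force on the ball at distance r from the apex is √r; that formula is not quoted in this thread, and `max_difference_quotient` is a hypothetical helper. For a Lipschitz function the quotient |f(x + h) - f(x)|/|h| stays below K however small h gets; for √r at r = 0 it grows like 1/√h.

```python
import math


def max_difference_quotient(f, x, hs):
    """Largest |f(x + h) - f(x)| / |h| over the sampled step sizes hs."""
    return max(abs(f(x + h) - f(x)) / abs(h) for h in hs)


steps = [10.0 ** -n for n in range(1, 9)]  # h = 0.1, 0.01, ..., 1e-8

# sqrt at 0: the quotient is 1/sqrt(h), so no finite K works (not Lipschitz).
print(max_difference_quotient(math.sqrt, 0.0, steps))
# A linear force law 3r: the quotient never exceeds K = 3 (Lipschitz).
print(max_difference_quotient(lambda r: 3.0 * r, 0.0, steps))
```

Sampling can only suggest, never prove, a failure of the condition; here the analytic form 1/√h confirms what the numbers show.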

Korolev shows that violations of Lipschitz continuity lead to branching solutions not only in the case of Norton's dome but in other scenarios as well, and, in the same spirit as Andrew above, he proposes that the Lipschitz condition should be considered a constitutive feature of classical mechanics in order to avoid, as he puts it, "physically impossible solutions that have no serious metaphysical import."
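Korolev's point that a non-Lipschitz force permits branching solutions can be checked directly on the equation of motion usually given for Norton's dome, r'' = √r (Norton's choice of units; the equation is assumed here, not quoted from the thread). This sketch, with hypothetical helper names, uses a finite-difference check to confirm that the ball staying at the apex forever, and the ball leaving the apex at any time T ≥ 0 along r(t) = (t - T)⁴/144, all satisfy the same equation from the same initial state r(0) = r'(0) = 0.

```python
import math


def second_derivative(r, t, h=1e-3):
    # Central finite-difference estimate of r''(t).
    return (r(t + h) - 2 * r(t) + r(t - h)) / h ** 2


def leave_apex_at(T):
    """Trajectory that rests at the apex until time T, then slides off."""
    return lambda t: 0.0 if t <= T else (t - T) ** 4 / 144.0


def residual(r, t):
    # How badly r violates r'' = sqrt(r) at time t.
    return abs(second_derivative(r, t) - math.sqrt(r(t)))


def stay_forever(t):
    return 0.0


candidates = [stay_forever] + [leave_apex_at(T) for T in (0.0, 2.5, 10.0)]
worst = max(residual(r, t) for r in candidates for t in (1.0, 4.0, 12.0))
print(worst)  # only finite-difference error, so every candidate is a solution
print([round(r(12.0), 3) for r in candidates])  # yet they disagree about where the ball is
```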

Ironically, as Samuel Fletcher notes in What Counts as a Newtonian System? The View from Norton’s Dome (2011), Korolev's own example of non-Lipschitz velocities in fluid dynamics is instrumental to productive research in turbulence modeling, "one whose practitioners would be loathe to abandon on account of Norton’s dome."

It seems to me that Earman oversells his point when he writes that "the fault modes of determinism in classical physics are so numerous and various as to make determinism in this context seem rather surprising." I like Fletcher's philosophical analysis, whose major point is that there is no uniquely correct formulation of classical mechanics, and that different formulations are "appropriate and useful for different purposes:"

As soon as one specifies which class of mathematical models one refers to by “classical mechanics,” one can unambiguously formulate and perhaps answer the question of determinism as a precise mathematical statement. But, I emphasize, there is no a priori reason to choose a sole one among these. In practice, the choice of a particular formulation of classical mechanics will depend largely on pragmatic factors like what one is trying to do with the theory. — Fletcher

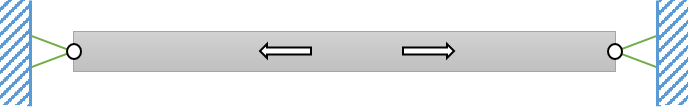

andrewk

I'm interested in what people think of the static problem on p21-22, of a beam supported at each end with a load in the middle. Norton says the limit case of a perfectly stiff beam is not consistent with the limit as stiffness approaches infinity of the cases with elastic beams. For finite stiffness the equation for the horizontal forces of the beam ends has a unique solution but for the infinitely stiff case it does not.

Maybe I'm misunderstanding, but I am not persuaded by this. The limit of the horizontal forces as the beam stiffness approaches infinity is zero, and that is the value that a first-principles analysis of the infinitely stiff case gives us too. The load pushes down with force W on the beam, and the ends of the beam push down on the supports with force W/2 at each end. There is nothing in the system supplying any horizontal forces, so the horizontal forces are zero, which is equal to the limit of the finitely stiff cases.

What the lack of a unique solution to the equations for the infinitely stiff case tells us is that, in addition to providing vertical support, the supports could also push inwards against the beam or pull outwards on it, with any force at all, and the system would still remain static. That is not surprising, given the beam is infinitely stiff and hence infinitely resistant to both tension and compression. But in the absence of the system containing any elements that supply such lateral forces, the lateral force must be zero.
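andrewk's reading of the static equations can be made concrete with rigid-body statics. This is a simplified sketch, assuming a weightless beam of length L with a load W at midspan and two supports that can each exert both vertical and horizontal force; it is not Norton's own setup or notation, and the function names are hypothetical. Vertical balance and moment balance fix both vertical reactions at W/2, but horizontal balance only requires the two end forces to cancel, so any inward squeeze (or outward pull) h is consistent with equilibrium.

```python
from fractions import Fraction


def in_equilibrium(W, L, V1, V2, H1, H2):
    """Check rigid-body equilibrium for a beam of length L with load W at midspan.

    V1, V2: upward support forces; H1, H2: horizontal forces on the beam's
    left and right ends (positive to the right).
    """
    vertical = V1 + V2 - W              # supports carry the load
    horizontal = H1 + H2                # end forces cancel
    moment_left = V2 * L - W * L / 2    # moments about the left support
    return vertical == 0 and horizontal == 0 and moment_left == 0


def reactions(W, h):
    # One-parameter family of solutions: h > 0 means the supports squeeze the beam.
    return (W / 2, W / 2, h, -h)


W, L = Fraction(10), Fraction(4)
for h in (0, 3, -7, Fraction(1, 3)):
    print(h, in_equilibrium(W, L, *reactions(W, h)))
```

Equilibrium alone therefore cannot pick out h; as andrewk says, any definite value has to come from whatever in the system actually supplies lateral force.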

So I don't think this does supply the example Norton wants of a system where the behaviour in the limit is not equal to the limit of the behaviours close to the limit - which is what happens with the dome.

Not relevant to the problem, but I would have thought that, if we allow the side supports to apply lateral forces other than the resistance to the natural lateral pull from the weight of the beam, then even in the finite-elasticity cases those lateral forces can range over an interval. The more strongly they pull (push) the beam ends outwards (inwards), the less (more) the beam will sag. So I'm not sure I can see any qualitative difference or discontinuity between the infinite and finite stiffness cases.

If that's right, then the only concrete example used to argue against the solution that suggests we should rule out the dome as an inadmissible idealisation, because of the infinite curvature at the top, has failed. All that is left to argue against that solution is the second-last paragraph on p21, which begins with 'It does not.' But I found that paragraph rather vague word salad and didn't feel that it contained any strong points. Indeed, I'm not sure I understood what point he was trying to make in it. Perhaps somebody could help me with that.

I'm interested in the thoughts of others, whether they agree with Norton's or my analysis of the beam example, and whether the argument against ruling out inadmissible idealisations can stand without it.

SophistiCat

Korolev actually does a similar limiting analysis of the dome itself, showing that for any finite elasticity (assuming a perfectly elastic material of the dome) there is a unique solution for a mass at the apex: the mass remains stationary; but when the elasticity coefficient is taken to infinity, i.e. the dome becomes perfectly rigid, the situation changes catastrophically and all of a sudden there is no longer a unique solution.

In the case of the beam in Norton's paper, in order to definitely answer the question we need to find the state of stress inside the beam, which for normal bodies is fixed by boundary conditions. The problem with infinite stiffness is that constitutive equations are singular, and therefore, formally at least, the same boundary conditions are consistent with any number of stress distributions in the beam. This happens for the same reason that division by zero is undefined: any solution fits. In the simple uniaxial case, the strain is related to the stress by the equation $\varepsilon = \sigma / E$. When E is infinite and 1/E is zero, the strain is zero and any stress is a valid solution to the equation. Boundary conditions in an extended rigid body will fix (some of the) stresses at the boundary, but not anywhere else. Or so it would seem.
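SophistiCat's singular constitutive equation can be illustrated by solving ε = σ/E for σ, written as (1/E)·σ = ε so that the rigid case 1/E = 0 is representable. This is a minimal sketch, assuming uniaxial linear elasticity and ends held a fixed distance apart (so the imposed strain is zero); the function name and its return convention are hypothetical.

```python
from fractions import Fraction


def stresses_consistent_with(strain, compliance):
    """Solve compliance * stress = strain for stress, where compliance = 1/E.

    Returns ("unique", value), ("any", None) or ("none", None).
    """
    if compliance != 0:
        return ("unique", Fraction(strain) / Fraction(compliance))
    # Rigid limit 1/E = 0: the left-hand side vanishes for every stress.
    return ("any", None) if strain == 0 else ("none", None)


# Ends held fixed, so the strain is 0. Stiffer and stiffer elastic beams...
for E in (10, 1000, 10 ** 9):
    print(E, stresses_consistent_with(0, Fraction(1, E)))  # ('unique', Fraction(0, 1)) each time
# ...versus the perfectly rigid beam:
print(stresses_consistent_with(0, 0))  # ('any', None)
```

For every finite E the only consistent stress is 0, and so is its limit as E grows, which matches andrewk's first-principles answer; only at 1/E = 0 does the equation stop constraining the stress at all.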

However, what meaning do stresses have in an infinitely stiff body? Because there can be no displacements, there is no action. Stresses are meaningless. Suppose that instead of perfectly rigid and stationary supports, the rigid beam in Norton's paper were suspended between elastic walls. Whereas in the original problem the entire system, including the supports, was infinitely stiff and admitted no displacements, now the elastic walls would experience the action of the forces exerted by the ends of the beam. Displacements would occur, energy would be expended, work would be done. The problem becomes physical, and physics requires energy conservation, which immediately yields the solution to the problem.

So I wouldn't worry too much about these singular limits; just as in the case of the division by zero, no solution makes more sense than any other, they are all meaningless.
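The "any stress fits" point can be sketched with a textbook statically indeterminate case instead of Norton's own beam (the setup and function names below are hypothetical illustrations): a bar of cross-section A clamped between two rigid walls, carrying an axial load P at a point that splits it into segments of lengths a and b. Equilibrium alone only gives R1 + R2 = P. With a finite E, compatibility (the load point moves by the same amount whether computed from the stretched left segment or the shortened right one, each via $\varepsilon = \sigma/E$) fixes the split. Setting 1/E to zero turns the formula into 0/0, while plain rigid statics accepts every split.

```python
import math


def wall_reactions(P, a, b, E, A=1.0):
    """Reactions (R1, R2) at the left and right walls for an axial load P applied
    at distance a from the left wall of a clamped bar of total length a + b.

    Equilibrium:   R1 + R2 = P
    Compatibility: R1 * a / (E * A) == R2 * b / (E * A)
                   (stretch of the left segment == shortening of the right one)
    """
    c1 = a / (E * A)  # compliance (1/stiffness) of the left segment
    c2 = b / (E * A)  # compliance of the right segment
    # With E = inf both compliances are 0.0 and this line becomes 0.0 / 0.0.
    R1 = P * c2 / (c1 + c2)
    return R1, P - R1


def satisfies_rigid_statics(P, R1, R2):
    # For a perfectly rigid bar only equilibrium is left to check,
    # so any split of P between the two walls passes.
    return math.isclose(R1 + R2, P)


# Finite stiffness: the split is unique (and here independent of E).
for E in (1e3, 1e6, 1e9):
    print(E, wall_reactions(8.0, 1.0, 3.0, E))

# Rigid limit taken literally: the formula is 0/0.
try:
    wall_reactions(8.0, 1.0, 3.0, math.inf)
except ZeroDivisionError:
    print("E = inf: reactions undefined (0/0)")

# ...while rigid statics accepts every split.
print([satisfies_rigid_statics(8.0, R1, 8.0 - R1) for R1 in (0.0, 2.0, 6.0, 8.0)])
```

Because both segments share one E here, the finite-stiffness split R1 = P·b/(a + b) carries over to the limit unchanged; if the two segments were stiffened at different rates, the limiting split would instead depend on the ratio of their compliances, which is exactly the 0/0 situation.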

Pierre-Normand

So I wouldn't worry too much about these singular limits; just as in the case of the division by zero, no solution makes more sense than any other, they are all meaningless. — SophistiCat

You mean, of course, the division of zero by zero. (Very good recent exchange between you and andrewk, by the way! I'll comment shortly.)

Pierre-Normand

@SophistiCat, @andrewk,

I was quite startled when I read the case of the beam, described by Norton as a "statically indeterminate structure", because just a few days earlier I had been struck by a similar real-world case. I was startled yet again today when I saw you two insightfully discussing it.

A friend of mine went to Cuba with his wife and they asked me to feed their two cats while they were away. They live on my home street, a mere five-minute walk away. As I was walking there, I noticed that the street sign, with the name of our street on it, had been rotated 45° from its normal horizontal position. The sign is screwed inside an iron frame, and the frame was secured to the wooden pole by a screw at its middle, while another screw, at the top, had come loose and thus allowed the sign to rotate around its (slightly off-center) horizontal axis. (I guess I'll go back there during the day and take a picture.) A few weeks ago there had been a storm in our area, with abnormally heavy winds, which knocked the power out for several hours. Those heavy winds may have been the cause.

But then, as I wondered what kind of force might have caused the top screw to come loose, I also wondered how any torque might have been applied to the middle screw before the top screw gave way (or, at least, began to loosen). I assumed that the middle screw was not centered on the horizontal axis of the sign, or else the wind would have produced no torque. But then I reasoned (while simultaneously realizing that it made no sense!) that, on the one hand, there couldn't be any horizontal force on the top screw without there being a torque on the middle screw, but also, on the other hand, that there could not be any torque on the middle screw without an initial horizontal displacement of at least one of the two screws! So, if the whole system were perfectly rigid, and treated as a problem of pure statics, there would appear to be no way for either a torque to be applied to the middle screw, or an equal and opposite lever reaction force to be applied to the top screw, unless one of those two forces could produce (dynamically, not merely statically) an initial action, or reaction, just as SophistiCat described regarding Norton's horizontal beam case. And such an initial dynamical action is only possible on the condition that the system not be ideally rigid. And then, of course, as SophistiCat astutely concluded (and I didn't conclude at the time) the ideal case might be indeterminate because of the different ways in which the limiting case of a perfectly rigid body could be approached. (I later arrived at a similar conclusion regarding Norton's dome, while Norton himself, apparently, didn't. But I'll comment on this shortly.)

Pierre-Normand

If that's right, then the only concrete example used to argue against the solution that suggests we should rule out the dome as an inadmissible idealisation because of the infinite curvature at the top, has failed. All that is left to argue against that solution is the second-to-last paragraph on p21 that begins with 'It does not.' But I found that para rather a vague word salad and didn't feel that it contained any strong points. Indeed I'm not sure I understood what point he was trying to make in it. Perhaps somebody could help me with that. — andrewk

I had also highlighted this paragraph from Norton's paper in orange, which is the color that I use to single out arguments that appear incorrect to me. In the margin, I had written "idem", referring back to my comment about the previous paragraph. On the margin of the previous paragraph, I had commented: "That may be because the limit wasn't approached correctly. A more revealing way to approach the limit would be to hold constant the shape of the dome (with infinite curvature at the apex, or close to it) and send balls sliding up with a small error spread around the apex. The limit would be taken where the error spread is being reduced."

Alternatively, we can proceed in the way @SophistiCat discussed, and allow the dome to have some elasticity. There will be an area near the apex where the mass can sink in and remain stuck. As we approach the limit of perfect rigidity, the sensitivity to the initial placement of the mass in the vicinity of the apex increases without limit and we arrive at the bifurcation point in the phase-space representation of this ideal system. The evolution is indeterminate because, by going to the ideal limit, we have hidden some feature of the dynamics and turned the problem into a problem of pure statics (comparable to the illegitimate idealization of the beam pseudo-problem, which SophistiCat aptly compared to the fallacious attempt to determine the true value of 0/0 with no concern for the specific way in which this ideal limit is being approached).
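For the rigid dome itself, the way the ideal limit hides the dynamics can be sketched numerically with Norton's equation of motion, written in his rescaled units as d²r/dt² = √r, where r is the distance from the apex measured along the surface. The sketch below is a plain fixed-step RK4 integrator (the function names, step size and the target r = 1 are illustrative choices, not anything from Norton's paper). It releases the mass at rest a distance r0 from the apex. As r0 shrinks, the time needed to reach r = 1 increases toward 2√3 ≈ 3.46, which is when the member of Norton's nonunique family that departs at T = 0, r(t) = t⁴/144, reaches r = 1; released exactly at r0 = 0, the integrator simply stays at rest, which is only one of the motions the ideal problem admits.

```python
import math


def accel(r):
    # Norton's dome in his rescaled units: d^2 r/dt^2 = sqrt(r), for r >= 0
    return math.sqrt(max(r, 0.0))


def time_to_reach(r0, r_target=1.0, dt=1e-4, t_max=10.0):
    """Release the mass at rest at distance r0 from the apex and return the
    first time (to within dt) at which r >= r_target, or None if it never
    gets there before t_max. Fixed-step classical RK4 on (r' = v, v' = accel)."""
    r, v, t = r0, 0.0, 0.0
    while t < t_max:
        if r >= r_target:
            return t
        k1r, k1v = v, accel(r)
        k2r, k2v = v + 0.5 * dt * k1v, accel(r + 0.5 * dt * k1r)
        k3r, k3v = v + 0.5 * dt * k2v, accel(r + 0.5 * dt * k2r)
        k4r, k4v = v + dt * k3v, accel(r + dt * k3r)
        r += dt * (k1r + 2 * k2r + 2 * k3r + k4r) / 6
        v += dt * (k1v + 2 * k2v + 2 * k3v + k4v) / 6
        t += dt
    return None


for r0 in (1e-2, 1e-4, 1e-8, 0.0):
    print(r0, time_to_reach(r0))
print("2*sqrt(3) =", 2 * math.sqrt(3))
```

The limit of the perturbed motions and the motion computed at the ideal initial condition are different solutions, which is the same kind of mismatch as in the 0/0 analogy.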

Pierre-Normand

But then I reasoned (while simultaneously realizing that it made no sense!) that, on the one hand, there couldn't be any horizontal force on the top screw without there being a torque on the middle screw (...) — Pierre-Normand

Maybe I should clarify my reasoning a bit here. Since the frame of the sign is held by two screws, the force of the wind on the sign will be opposed by an equal reaction force distributed over the two screws. (I am ignoring the torque around the vertical axis, which is not relevant here.) But my main point is that the force applied to either screw doesn't result in any torque (around the horizontal axis) being applied to the other screw unless the frame, working as a lever, is allowed to rotate a little bit. And this can't happen before at least one of the screws begins to loosen up.

SophistiCat

And then, of course, as SophistiCat astutely concluded (and I didn't conclude at the time) the ideal case might be indeterminate because of the different ways in which the limiting case of a perfectly rigid body could be approached. — Pierre-Normand

Thanks, but I cannot take credit for what I didn't actually say :) The rigid beam case is indeterminate in the sense that multiple solutions are consistent with the given conditions. I did think that there may be a way to approach a different solution (with non-zero lateral forces) by an alternative path of idealization, perhaps by varying something other than elasticity. My intuition was primed by the 0/0 analogy, in which a parallel strategy of approaching the limit from some unproblematic starting point would clearly be fallacious. But I couldn't think of anything at the moment, so I didn't mention it.

I think I have such an example now though.

Suppose that a pair of lateral forces of equal magnitude but opposite directions were applied to the beam. This would make no difference to the original rigid beam/wall system: the forces would balance each other, thus ensuring equilibrium, and everything else would be the same, since the forces wouldn't produce any strains in the beam; the forces would thus be merely imaginary, since they wouldn't make any physical difference. However, if we were to approach the rigid limit by starting from a finite elasticity coefficient and then taking it to infinity, there would be a finite lateral force acting on the walls all the way to the limit.
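This alternative limiting path can be sketched with a deliberately crude, hypothetical spring model of the beam/wall system (the names and numbers are illustrative assumptions): the beam is a spring of stiffness k_beam, each wall a spring of stiffness k_wall, and an equal and opposite pair of outward axial forces F acts at the beam's ends. By symmetry each end moves outward by the same amount u, so the wall force is R = k_wall·u, the beam tension is T = k_beam·2u, and equilibrium at each end gives F = R + T. Scaling both stiffnesses up together leaves R = F·k_wall/(k_wall + 2·k_beam) unchanged, so the force on the walls stays finite all the way to the rigid limit, whereas without the pair (F = 0) it is zero throughout.

```python
def wall_force(F, k_beam, k_wall):
    """Axial force on each wall when an outward pair of forces F acts at the
    ends of a beam held between two walls (all parts modelled as springs)."""
    # End displacement u from end equilibrium: F = k_wall*u + k_beam*(2*u)
    u = F / (k_wall + 2.0 * k_beam)
    return k_wall * u


# Stiffen everything at a fixed ratio k_wall / k_beam = 2 and watch R.
for scale in (1.0, 1e3, 1e6, 1e9):
    with_pair = wall_force(1.0, k_beam=scale, k_wall=2.0 * scale)
    without_pair = wall_force(0.0, k_beam=scale, k_wall=2.0 * scale)
    print(f"scale={scale:g}  R with pair={with_pair:.6f}  R without={without_pair:.6f}")
```

Changing the ratio k_wall/k_beam changes the limiting value of R, so, as with 0/0, the rigid answer here is set by the path of idealization rather than by the rigid model itself.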

SophistiCat

In The Norton Dome and the Nineteenth Century Foundations of Determinism, 2014 (PDF) Marij van Strien takes a look at how 19th-century mathematicians and physicists confronted instances of indeterminism in classical mechanics*. She notes that the fact that differential equations of motion sometimes lack unique solutions was known pretty much for as long as differential equations had been studied, since the beginning of the 19th century. Notably, in the 1870s Joseph Boussinesq studied a parameterized dome setup, of which what is now known as Norton's dome is a special case. Although Boussinesq did not solve the equations of motion for the dome, he studied their properties, and, noting the emergence of singularities for some combinations of parameters, he concluded that the equations must lack a unique solution in those instances (this can also be seen by noting that the equation of motion does not satisfy the Lipschitz condition at the apex of the dome in those configurations; see above.)

* A less technical work by the same author is Vital Instability: Life and Free Will in Physics and Physiology, 1860–1880, 2015 (PDF).
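Both the Lipschitz failure and the resulting nonuniqueness can be checked directly on Norton's equation of motion for his dome, d²r/dt² = √r in his rescaled units. The sketch below (function names and the finite-difference step are illustrative) shows that the difference quotient of √r at r = 0 equals 1/√r, which has no finite bound, and checks by central differences that every member of Norton's family, r(t) = 0 for t ≤ T and r(t) = (t − T)⁴/144 for t > T, satisfies the equation whatever the departure time T is.

```python
import math


def rhs(r):
    # Right-hand side of Norton's equation of motion: d^2 r/dt^2 = sqrt(r)
    return math.sqrt(r)


# Lipschitz check at the apex: |rhs(r) - rhs(0)| / |r - 0| = 1/sqrt(r),
# which grows without bound as r -> 0, so no Lipschitz constant exists there.
for r in (1e-2, 1e-4, 1e-8):
    print(r, (rhs(r) - rhs(0.0)) / r)


def norton_solution(t, T):
    # Mass rests at the apex until time T, then slides off (T is arbitrary).
    return 0.0 if t <= T else (t - T) ** 4 / 144.0


def residual(t, T, h=1e-3):
    """Central-difference estimate of r''(t) - sqrt(r(t)); ~0 for a solution."""
    r_minus, r_0, r_plus = (norton_solution(s, T) for s in (t - h, t, t + h))
    return (r_plus - 2.0 * r_0 + r_minus) / h**2 - rhs(r_0)


for T in (0.0, 1.0, 2.5):
    worst = max(abs(residual(t, T)) for t in (0.5, 1.0, 2.0, 3.0, 4.0))
    print(f"T={T}: max residual {worst:.1e}")
```

The residuals stay at the level of finite-difference error for every T, so the same initial state (at rest on the apex) is compatible with a whole family of motions, which is what the missing Lipschitz bound allows.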

Van Strien writes that "nineteenth century determinism was primarily taken to be a presupposition of theories in physics." Boussinesq was something of an exception in that he took the nonunique solutions that he and others discovered seriously. (He acknowledged that his dome was not a realistic example, taking it more as a proof-of-concept; rather, he thought that actual indeterminism would be found in some hypothetical force fields produced by interactions between atoms, which he showed to be mathematically similar to the dome equations.) Boussinesq believed that such branching solutions of mechanical equations provided a way out of Laplacian determinism, giving the opportunity for life forces and individual free will to do their own thing.

But by and large, Van Strien says, such mathematical anomalies were not taken as indications of something real: in cases where solutions to equations of motion were nonunique, one just needed to pick the physical solution and discard the unphysical ones. This is probably why these earlier discoveries did not make much of an impression at the time and have since been partly forgotten, so that Norton's paper, when it came out, caused a bit of a scandal.

From my own modest experience, such attitudes towards mathematical models still prevail, at least in traditional scientific education and practice. It is not uncommon for a model or a mathematical technique to produce an unphysical artefact, such as multiple solutions where a single solution is expected, a negative quantity where only positive quantities make sense, forces in an empty space in addition to forces in a medium, etc. Scientists and engineers mostly treat their models pragmatically, as useful tools; they don't necessarily think of them as a perfect match to the structure of the Universe. It is only when a model is regarded as fundamental that some begin to take the math really seriously - all of it, not just the pragmatically relevant parts. So that if the model turns out to be mathematically indeterministic, even in an infinitesimal and empirically inaccessible portion of its domain, this is thought to have important metaphysical implications.

Interpretations of quantum mechanics are another example of such mathematical "fundamentalism". Proponents of the Everett "many worlds" interpretation, such as cosmologist Sean Carroll, say (approvingly) that all their preferred interpretation does is "take the math seriously." Indeed, the "worlds" of the MWI are a straightforward interpretation of the branching of the quantum wavefunction. (Full disclosure: I myself am sympathetic to the MWI, to the extent to which I can understand it.)

Are "fundamentalists" right? Can a really good theory give us warrant to take all of its implications seriously?

SolarWind

It seems to me that the formulation of the laws of nature as differential equations leaves room for several solutions. They are formulated from one point of time to the next. But there is no next point of time. Only in retrospect can it be determined whether the solution fits the differential equation. But this only plays a role in special cases like Norton's dome.

- © 2026 The Philosophy Forum