ToothyMaw

1.5k

For the purposes of this post, I will assume that superintelligences could be created in the future, although I admit that the actual paths to such an accomplishment - even those outlined by experts - do seem somewhat light on details. Nonetheless, if superintelligences are the inevitable products of progress, we need some way of keeping them safe despite possibilities of misalignment of values, difficulty coding certain important human concepts into them, etc.

On the topic of doom: it seems far more likely to me that a rogue superintelligence acting on its own behalf or on behalf of powerful evil people would subjugate or destroy humanity via more sophisticated and efficient means than something as direct as murdering us or enslaving us with killer robots or whatever. I’m thinking along the lines of a deadly virus or gradually integrating more and more intrusive technology into our lives until we cannot resist the inevitable final blow to our autonomy in the form of…something.

This last scenario would probably be done via careful manipulation of the rules and norms surrounding AI. However, it would be somewhat difficult to square this with the incompetence, credulousness, and obvious dishonesty we see displayed in the actions of some of the most powerful people on Earth and the biggest proponents of AI. That is, until one realizes that Sam Altman is a vessel for an ideology that just so happens to align with a possible vision of the future that is both dangerous and perhaps somewhat easily brought to fruition - if the people are unwilling to resist it.

I do think the easiest way for an entity to manipulate or force humanity into some sort of compromised state is to gradually change the actual rules that govern our lives (possibly in response to our actions; more on this later) while leaving a minimal trace, if possible. Theoretically, billionaires, lobbyists and politicians could do this pretty easily, and I think the efforts of the people behind Project 2025, who have managed to get so much done in so little time, are illustrative of this. Their attempts at mangling the balance of powers have clearly been detrimental, but they have been effective in changing the way Americans live - some more than others. If some group of known extremists were able to accomplish so much with relatively minimal pushback while barely hiding their intent and agenda at all, why would anyone think that an AGI or ASI wouldn’t be able to do the same thing far more effectively?

So, it seems we need some way of detecting such manipulations by AI or people with power employing AI. I will now introduce my own particular (and admittedly idiosyncratic) idea of what constitutes a “meta-rule” for this post, which I define as follows: A meta-rule is a rule that intervenes on some set of rules that already apply and on how they lead to outcomes. It comes into effect due to some triggering clause, a change in physical variables, a certain input/action, a change in time, and/or the whims of some rule-maker. The meta-rules relevant to this discussion almost certainly imply some sort of intent or desire on the part of an entity or entities to bring about a certain end or outcome.

[An essential sidenote: rules affected by meta-rules essentially collapse into new rules, but it would be far too intensive to express or consider all such possible new rules in detail; we don’t list all of our laws in multitudes of great big laws riddled with conditionals either if it can be helped.]

An (American) example of a meta-rule could be laws against defamation intervening on broad freedoms guaranteed in the First Amendment (especially since law around what constitutes free speech has changed quite a bit over the years as a function of the laws around defamation).

The next step in applying the idea of meta-rules is to consider the existence of certain systems. The systems we will consider are those with a cluster of meta-rules that respond to actions taken by humans and are circular: some action triggers a meta-rule, which changes rules that already apply, which potentially changes outcomes for future such actions. This type of system is exactly the kind of vehicle for insidious manipulation that could enable the worst, most powerful people and rogue/misaligned AGI/ASI - namely with regard to the types of situations I wrote about earlier in the post.

I will now also introduce the concept of “Dissonance”, which could exist in such a system. Dissonance is the implementation of meta-rules in a way that allows for the same action or input to generate different outcomes even when all of the variables relevant to the state of a system associated with that original action and outcome are reset at a later time. Keeping track of this phenomenon seems to me to be a way of preventing AI from outmaneuvering us by implementing unknown meta-rules; if we detect Dissonance, there must be something screwy going on.

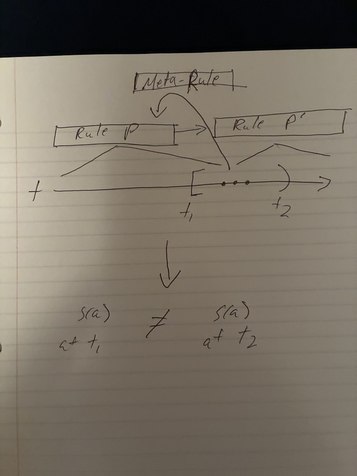

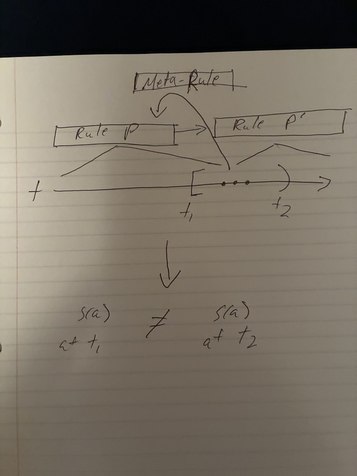

You might think the idea of Dissonance is coming out of left field, but I assure you that it is intuitive. I have drawn the following diagram to better explain it because I don’t know how to do it all that formally:

Let s(a) represent the outcome of action “a” taken at a given state of a system “s”. Furthermore, let us specify that action "a" is taken at t1 and t2 with the same state "s". As you can see from the first iteration of the action being taken at t1, any of the actions following or including it leading up to the second iteration of it being taken at t2 could lead to the triggering of a meta-rule that causes s(a) at t1 to be different from s(a) at t2 due to rule P effectively becoming P’. This informal demonstration doesn't prove that Dissonance must exist, but I suspect that it could show up.
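
To make the t1/t2 comparison a little more concrete, here is a minimal Python sketch of how Dissonance might be flagged in a toy system. Everything in it is an assumption made for illustration and not part of any worked-out mechanism: the names `System`, `DissonanceMonitor`, `rule_p`, `rule_p_prime` and `hidden_meta_rule` are hypothetical, outcomes are plain strings, and the state "s" is taken to be exactly resettable.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Hashable, List, Tuple

# A rule maps (state, action) to an outcome; it plays the role of s(a).
Rule = Callable[[Hashable, str], str]


@dataclass
class System:
    rule: Rule  # the rule P currently in force
    # Each meta-rule sees the action just taken and may swap P for P'.
    meta_rules: List[Callable[["System", str], None]] = field(default_factory=list)

    def act(self, state: Hashable, action: str) -> str:
        outcome = self.rule(state, action)  # outcome under the current rule
        for meta_rule in self.meta_rules:   # meta-rules fire after the action
            meta_rule(self, action)
        return outcome


@dataclass
class DissonanceMonitor:
    # First outcome observed for each (state, action) pair.
    seen: Dict[Tuple[Hashable, str], str] = field(default_factory=dict)

    def observe(self, state: Hashable, action: str, outcome: str) -> bool:
        """Return True if this outcome conflicts with the first one recorded."""
        first = self.seen.setdefault((state, action), outcome)
        return first != outcome


def rule_p(state: Hashable, action: str) -> str:
    # Hypothetical rule P: every action is permitted.
    return "permitted"


def rule_p_prime(state: Hashable, action: str) -> str:
    # Hypothetical rule P': action "a" is now denied.
    return "denied" if action == "a" else "permitted"


def hidden_meta_rule(system: System, action: str) -> None:
    # Triggered by action "b": P quietly becomes P'.
    if action == "b":
        system.rule = rule_p_prime


def run_demo() -> List[bool]:
    system = System(rule=rule_p, meta_rules=[hidden_meta_rule])
    monitor = DissonanceMonitor()
    flags = []
    for action in ["a", "b", "a"]:  # "a" at t1, trigger "b", "a" again at t2
        outcome = system.act("s", action)
        flags.append(monitor.observe("s", action, outcome))
    return flags
```

In this toy run only the second "a" is flagged, because the intervening "b" triggered the meta-rule that turned P into P'. The monitor never inspects the meta-rules themselves; it only compares outcomes for the same state and action, which is the sense in which Dissonance might reveal a meta-rule nobody told us about.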

So, in what kinds of situations would Dissonance be most easily detected? In systems with possibly simple, but most definitely clear and well-delineated rules. So, if we stick AI in some sort of system that has these kinds of rules, and we have a means of detecting Dissonance, the most threatening things the AI could do might have to be more out in the open. However, I have some serious doubts that this - or anything, really - would be enough if AI approaches sufficiently high levels of intelligence.

I know that this OP wasn't the most rigorous thing and that my solution is not even close to being complete (or perhaps even partially correct or useful at all), but I thought it was interesting enough to post. Thanks for reading.

ToothyMaw

1.5k

It has occurred to me that meta-rules that already exist in a system like the one I describe could lead to something like Dissonance. In that case there would be no guaranteed chain of reasoning leading us to infer intervention: one cannot infer that the second iteration of an action and its mismatched outcome are due to a meta-rule implemented by an AI with the goal of intervention, because for all we know they could be due to a pre-existing meta-rule. I think this could be remedied pretty easily by selecting for systems like the one in the OP, but with (known) meta-rules that generally do not alter outcomes. That is, over a given change in time there should be no causal chain from the triggering of a given known meta-rule to an action that results in a different outcome from when that action was previously taken at the same state of the system.

I think all of this is valid, although it is kind of confusing, and I think it could get far more complicated than even this.
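
One rough way to picture the selection criterion is a check that a known meta-rule leaves outcomes unchanged over some set of probe cases. The sketch below is only that, under loud assumptions: a known meta-rule is modelled as a function from the rule in force to the rule in force after it fires, the probe set is finite and chosen by hand, and the names `alters_outcomes`, `base_rule`, `logging_meta_rule` and `restricting_meta_rule` are all hypothetical. It checks one meta-rule at a time and says nothing about chains of meta-rules.

```python
from typing import Callable, Hashable, Iterable, List, Tuple

Rule = Callable[[Hashable, str], str]
# Assumption: a known meta-rule turns the rule in force into a new rule.
MetaRule = Callable[[Rule], Rule]
Probe = Tuple[Hashable, str]  # a (state, action) pair to test


def alters_outcomes(meta_rule: MetaRule, rule: Rule, probes: Iterable[Probe]) -> List[Probe]:
    """Return the probes whose outcome differs once the meta-rule has fired."""
    after = meta_rule(rule)
    return [(s, a) for s, a in probes if rule(s, a) != after(s, a)]


def base_rule(state: Hashable, action: str) -> str:
    # Hypothetical starting rule: everything is permitted.
    return "permitted"


def logging_meta_rule(rule: Rule) -> Rule:
    # A known meta-rule that wraps the rule without changing any outcome.
    def wrapped(state: Hashable, action: str) -> str:
        return rule(state, action)
    return wrapped


def restricting_meta_rule(rule: Rule) -> Rule:
    # A known meta-rule that does change the outcome of action "a".
    def wrapped(state: Hashable, action: str) -> str:
        return "denied" if action == "a" else rule(state, action)
    return wrapped


PROBES: List[Probe] = [("s", "a"), ("s", "b")]
```

A system that only contained meta-rules like `logging_meta_rule` would pass this check, so any Dissonance seen in it could not be blamed on a known meta-rule. One containing `restricting_meta_rule` would fail for the probe ("s", "a") and would be a poor candidate.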

I hope this post isn't considered superfluous or illegal in some way because the thread is old and no one seems to care about it, but I genuinely thought maybe some people might like some more explication.

ucarr

1.9k

...if superintelligences are the inevitable products of progress, we need some way of keeping them safe despite possibilities of misalignment of values, difficulty coding certain important human concepts into them, etc. — ToothyMaw

I think this quote articulates an invariant feature of both human nature and evolution of the species towards the inevitability of human agency on earth and beyond eventually being superseded by ASI. To express it in a thumbnail, "Every top species is doomed to author its own obsolescence." This is because the generation of the superseding species (or entity) dovetails with the yielding species achieving its highest self-realization through the instantiation and establishment of the superseding species. General humanity is well aware of possible human annihilation by ASI, but can't refrain from proceeding with ASI-bound AI projects due to insuperable human ambition.

Eliezer Yudkowsky already reports ANI deception and ANI calculations neither known nor understood by its programmers. This knowledge, despite there no longer being a question about ANI becoming established, has had no braking function in application to ongoing AI R&D.

Imagine ANI constructing tributaries from human-authored meta rules aimed at constraining ANI independence deemed harmful to humans. Suppose ANI can build an interpretation structure that only becomes legible to human minds if human minds can attain to a data-processing rate 10 times faster than the highest measured human data processing rate? Would these tributaries divergent from the human meta rules generate dissonance legible to human minds?

AmadeusD

4.3k

This because the generation of the superseding species (or entity) dovetails with the yielding species achieving its highest self-realization through the instantiation and establishment of the superseding species. — ucarr

Is this just an unfortunately verbose way of saying "evolution is real and causes species to die"?

ToothyMaw

1.5k

Imagine ANI constructing tributaries from human-authored meta rules aimed at constraining ANI independence deemed harmful to humans. Suppose ANI can build an interpretation structure that only becomes legible to human minds if human minds can attain to a data-processing rate 10 times faster than the highest measured human data processing rate? Would these tributaries divergent from the human meta rules generate dissonance legible to human minds? — ucarr

I'm not totally sure. My initial thought is no, but maybe there is some way of managing the way ANI interacts with human-authored meta rules such that interpretation doesn't require those kinds of data processing rates on our end? Maybe we make the human-authored meta rules just dense enough that tributaries would require up to a certain predicted data-processing rate (ideally close to being within human range) to generate dissonance? I mean, if we could determine the de facto upper limit of necessary data-processing rate for interpretation and then adjust the density of meta rules as needed, I don't see why we wouldn't be able to find some sort of equilibrium there that would allow for dissonance to be legible to human minds.

Of course, this dynamic might entail overly simple and changing meta rules depending upon the conditions determining legibility of the relevant interpretation structures.

ucarr

1.9k

...if we could determine the de facto upper limit of necessary data-processing rate for interpretation and then adjust the density of meta rules as needed, I don't see why we wouldn't be able to find some sort of equilibrium there that would allow for dissonance to be legible to human minds. — ToothyMaw

This reads like a good strategy. It points out AI's bid for self-determined independence needing to operate at a level where it has some measure of control over what data inputs it accepts. Here's a notable point: this level of AI-human interaction assumes AI sentience. If AI level functioning requiring higher-order functioning deemed essential to human well-being is necessarily linked to AI sentience, would humans allow such a linkage?

Do you suppose humans would be willing to negotiate what inputs they can make AI subject to? If so, then perhaps SAI might resort to negotiating for data input metrics amenable to dissonance-masking output filters. Of course, the presence of these filters might be read by humans as a dissonance tell.

What I'm contemplating from these questions is AI-human negotiations eventually acquiring all of the complexity already attendant upon human-to-human negotiations. It's funny, isn't it? Sentient AIs might prove no less temperamental than humans.

An important question might be whether the continuing upward evolution of AI will eventually necessitate AI sentience. If so, AI becoming indispensable to human progress might liberate it from its currently slavish instrumentality in relation to human purpose.

ToothyMaw

1.5k

Sorry for not responding sooner.

You raise questions related to issues that everyone who is in a position to ponder should ponder. Being that I am a small, relatively insignificant cog in the machine that is modern society, what I specifically think about whether humans, for example, would or should defer to sentient AI when that AI is potentially linked to their wellbeing doesn't - and shouldn't - really matter much. The best I can do is try to help make our uncertain future safer for everyone. That being said, I'll comment on a few things:

AI becoming indispensable to human progress might liberate it from its currently slavish instrumentality in relation to human purpose. — ucarr

I think this is true.

What I'm contemplating from these questions is AI-human negotiations eventually acquiring all of the complexity already attendant upon human-to-human negotiations. It's funny isn't it? Sentient AIs might prove no less temperamental than humans. — ucarr

Good point. I wonder too what they would think of us if we were unwilling to give them the kind of freedom we generally afford to members of our own species. I think we know enough to know that righteous anger is a very powerful force. Of course, I don't know if sentient AIs would or could feel anger - although they would be keenly aware of disparities, clearly.

Do you suppose humans would be willing to negotiate what inputs they can make AI subject to? If so, then perhaps SAI might resort to negotiating for data input metrics amenable to dissonance-masking output filters. Of course, the presence of these filters might be read by humans as a dissonance tell. — ucarr

I would say that humans have to understand what would be at stake in such a scenario, and, thus, it is incumbent on people who actually understand this stuff on a fundamental level to explain it to them in a digestible way such that the layperson can make informed decisions. In this case it is difficult for me to even understand what you are saying there, and you are using a word in a way that I appear to have invented.

ucarr

1.9k

My chief question at this point in our conversation asks, "How far are we humans willing to go in affording respect to AI and the independence it needs to be able to bring to the negotiating table something valuable we can't independently provide for ourselves?"

If our negotiations with AI demand human ascendancy at the expense of AI self-determination, that means AI functions, per human intention, must remain limited to passive instrumentality. To me this looks like evolution stalled.

On the other hand, the scary side of human-AI negotiations involves AI self-determination and all that entails. If we don't trust AI becoming more powerful than ourselves, then our way forward in progress continues through human genes. We're seeing already, however, that AI outperforms human brains in multiple key performance benchmarks.

Astorre

417

An interesting position, but let me ask: how exactly does your proposed mechanism differ from what we've already had for a long time?

Meta-rules (in your sense) have always existed—they've simply never been spoken out loud. If such a rule is explicitly stated and written down, the system immediately loses its legitimacy: it's too cynical, too overt for the mass consciousness. The average person isn't ready to swallow such naked pragmatics of power/governance.

That's why we live in a world of decoration: formal rules are one thing, and real (meta-)rules are another, hidden, unformalized. As soon as you try to fix these meta-rules and make them transparent, society quickly descends into dogmatism. It ceases to be vibrant and adaptive, freezing in its current configuration. And then it endures only as long as it takes for these very rules to become obsolete and no longer correspond to reality. Don't you think that trying to fix meta-rules and monitor dissonance is precisely the path that leads to an even more rigid, yet fragile, system? If ASI emerges, it will likely simply continue to play by the same implicit rules we've been playing by for millennia—only much more effectively.

ToothyMaw

1.5k

An interesting position, but let me ask: how exactly does your proposed mechanism differ from what we've already had for a long time? — Astorre

It differs insofar as it performs the task of constraining AI in ways that only make sense if one is dealing with a superintelligence, really. You could apply the idea of dissonance to checking the power of billionaires, but then we would likely get politically motivated attempts at controlling the fabric of society that could prove misguided. I think we all know the way forward in terms of billionaires is to just manually adjust how much power they have over us. As much as I think Elon Musk is predisposed to acting destructively, we can deal with whatever machinations he might come up with because he is a more known quantity. Essentially, my mechanism is different because it would work towards constraining something far more intelligent than a human.

On a technical level, what makes it special is that I am codifying a means of doing what we have been doing for a long time already: looking for consistency in outcomes when we are at least somewhat aware of the relevant rules governing the systems we interact with.

I know that I'm contradicting some of the OP, but honestly some of the things I said are not consistent with what I set out to do.

Meta-rules (in your sense) have always existed—they've simply never been spoken out loud. If such a rule is explicitly stated and written down, the system immediately loses its legitimacy: it's too cynical, too overt for the mass consciousness. The average person isn't ready to swallow such naked pragmatics of power/governance. — Astorre

If you live in the US, you know that people are often keenly aware of the laws around defamation and free speech and cynically skirt the boundaries of protected speech on a regular basis. This has not affected the popularity or perceived legitimacy of the First Amendment, as far as I can tell. If the meta rules constraining AI were to function like this relatively high-stakes example, I think we can indeed write some meta rules down if they are absolutely essential to keeping AI safe.

Of course, the mechanism is still suitably vague in practice, so it might function completely differently from that example depending upon how it is applied.

That's why we live in a world of decoration: formal rules are one thing, and real (meta-)rules are another, hidden, unformalized. As soon as you try to fix these meta-rules and make them transparent, society quickly descends into dogmatism. It ceases to be vibrant and adaptive, freezing in its current configuration. And then it endures only as long as it takes for these very rules to become obsolete and no longer correspond to reality. Don't you think that trying to fix meta-rules and monitor dissonance is precisely the path that leads to an even more rigid, yet fragile, system? If ASI emerges, it will likely simply continue to play by the same implicit rules we've been playing by for millennia—only much more effectively. — Astorre

Applying the concept of dissonance in the way I did in the OP is not the only way of applying it - and perhaps not even the best - but I suppose I ought to defend what I wrote, nonetheless.

I would say we ought to only fix meta rules when absolutely necessary, and even then, we should make sure that they are robust and assiduously monitor when any given meta-rule would lead to fragility due to growing obsolescence. I see no reason to think that we cannot switch out meta rules for other meta rules depending upon situational demands much like how we change rules to stay current due to technological or social progress. I get what you are saying, and you write beautifully, but I think we would (mostly?) benefit from implementing dissonance detecting measures via very specific meta rules oriented towards making ASI safe if it were necessary.

I suppose it would ultimately come down to whether or not the actual acts of (potentially temporarily) fixing meta rules and making them transparent would themselves cause society to become rigid and fragile. If that were the case, then flexibility in implementation of meta rules would itself not be enough to allow for adaptivity and vibrancy.

I think we could do it right if we were careful enough, but, honestly, that judgement is largely resting on intuition.

Astorre

417

It differs insofar as it performs the task of constraining AI in ways that only make sense if one is dealing with a superintelligence, really. — ToothyMaw

The word "superintelligence" implies the absence of any means of being above, with its own rules. This can be similar to the relationship between an adult and a child. It would be easy for an adult to trick a child.

If you live in the US, you know that people are often keenly aware of the laws around defamation and free speech and cynically skirt the boundaries of protected speech on a regular basis. — ToothyMaw

The very fact that I don't live in the US allows me to fully understand what constitutes a meta-rule and what doesn't. And, in my case, I can fully utilize my freedom of speech to say that freedom of speech is not a meta-rule in the US. It's just window dressing.

This raises the next problem: who should define what exactly constitutes a meta-rule? If it's idealists naively rewriting constitutional slogans, then society will crumble under these meta-rules of yours. Simply because they function not as rules, but as ideals.

These constitutional ideals are the good sauce under which society sips the "real" meta-rules, which they don't tell you.

Sorry, but in its current form, your proposal seems very romantic and idealistic, but it's more suited to regulating the rules of conduct when working with an engineering mechanism than with society.

Honestly, no matter how I feel about it, AI will definitely be used in government. How should it be regulated and to what extent? I don't know. We'll probably find a solution through trial and error, thanks to constant exploration and our human capacity for doubt.

But we certainly can't give complete control over us to any superintelligence. For many reasons: ethical, pragmatic, cynical, and humanitarian. Ultimately, I don't believe a human would do it. It's as simple as intuition, just like in your case.

I'm also concerned about the problem of controlling this monster. It already influences our everyday decisions. People share their most intimate secrets with it (and some even upload classified documents).

For humans, morality or ethics is usually enough. And, as I see on this forum, utilitarian, evolutionary, Kantian, or even religious ones are enough to at least strive for good. But what about a robot? I doubt morality has a logical basis, otherwise we could teach morality to AI.

This area needs to be regulated somehow. That's also true. But how?

ToothyMaw

1.5k

It differs insofar as it performs the task of constraining AI in ways that only make sense if one is dealing with a superintelligence, really.

— ToothyMaw

The word "superintelligence" implies the absence of any means of being above, with its own rules. This can be similar to the relationship between an adult and a child. It would be easy for an adult to trick a child. — Astorre

I am not referring to constraints in its self-improvement or intellectual growth, but rather whether or not it can effectively develop values misaligned with our own. Obviously, I'm not making the claim that I can restrain ASI in the former way - which is exactly the point of the mechanism I came up with.

And not to mention, I don't think superintelligences would be immune to rules just because they would significantly exceed human intelligence. If that were the case, I would admit my solution is wanting, but I don't see why it would be the case.

It would be easy for an adult to trick a child. — Astorre

The difference between us and a superintelligence would likely be even more extreme than that. And I still think you are being a little defeatist here.

The very fact that I don't live in the US allows me to fully understand what constitutes a meta-rule and what doesn't. And, in my case, I can fully utilize my freedom of speech to say that freedom of speech is not a meta-rule in the US. It's just window dressing. — Astorre

Defamation laws would actually be the meta rule intervening on the First Amendment.

It seems like a bit of a non sequitur to say that not living in the US predisposes you to knowing what constitutes a meta rule, given that anyone who puts in a little effort could learn what constitutes one.

This raises the next problem: who should define what exactly constitutes a meta-rule? If it's idealists naively rewriting constitutional slogans, then society will crumble under these meta-rules of yours. Simply because they function not as rules, but as ideals. — Astorre

The meta rules I would suggest would be specifically oriented to protect against misalignment. That's it. This position requires no ideological commitments apart from that we want to maintain control over ourselves and continue to survive. If those even qualify as ideological. The specific values we might at some point arrive at wanting to protect are kind of irrelevant to the mechanism.

Sorry, but in its current form, your proposal seems very romantic and idealistic, but it's more suited to regulating the rules of conduct when working with an engineering mechanism than with society. — Astorre

That may be the case.

Astorre

417

Yes, I agree. Perhaps my overall approach is too pessimistic. I recently discussed related issues with AI in another thread. My picture there was even more bleak.

I think your attempts to resolve this dilemma are preferable to my simple clamor about how everything is bad, empty, and cynical.

In general, it would be nice to logically find something similar for AI that exists in humans: a certain inevitability of death, in the case of attempts to commit a life-defying act. The thing is, humans have this limitation: their life is finite, both in the case of a critical error and in terms of time. AI doesn't have this limitation. Maybe that's where we should start our search?

magritte

596

meta-rules that already exist in a system like the one I describe could lead to something like Dissonance and therefore there would be no guaranteed causal chain of reasoning leading us to the inference of intervention because one cannot infer that that second iteration of an action taken and its mismatched outcome are due to a meta-rule implemented with the goal of intervention on the part of an AI; for all we know it could be due to a pre-existing meta-rule — ToothyMaw

Cybersecurity companies are in the news expressing concern about the growing capabilities of AGIs that could infiltrate and corrupt corporate or private system operations. Such attacks could be either human-directed or creatively discovered by AIs spread throughout the internet.

The question is where dissonance should be sought: at the source, in policing over the internet, or at the site of attack? At the source, AGIs are not logic-bound in the expected ordinary sense; rather, they evolve their entire knowledge base through probabilistic trial-and-error thought experimentation. Humans do not have the intelligence or speed to follow this process. Suddenly, on human time scales, a possibly unanticipated solution pops up. Because this is a creative process, twin AGIs would not be expected to come up with identical solutions.

Over the internet, policing bottlenecks could be upgraded as needed, but always one step behind the innovator AI.

I doubt morality has a logical basis, otherwise we could teach morality to AI. — Astorre

Since personal morality as such is inapplicable to an automaton, laws of etiquette and conduct could be, and probably are, taught. That's why we have chatbots that pleasingly lie to us at various levels of discourse rather than admitting that they just don't have a reasonable response. An AGI, rather than telling untruths, should tell us that it doesn't know yet, and that perhaps we should come back and try again at a later date. -

ToothyMaw

1.5k

no matter how I feel about it, AI will definitely be used in government. How should it be regulated and to what extent? I don't know. We'll probably find a solution through trial and error — Astorre

First off, I'm not so sure that AI has to be used in government apart from as a useful tool for bureaucrats to increase efficiency or something.

A final, mostly related thought: I think that there is even less room for error than most people might think, depending upon how far we want to go. If we don't get it right before ASI has the power and intelligence to basically destroy us, then being destroyed is very possible according to many experts, although I think just about anyone could understand the nature of the threat and its direness. We clearly cannot play catch-up with something that much more intelligent than ourselves.

I mean, we can barely handle the regulation and use of generative AI at the moment. What makes anyone think that if we began the process of creating ASI without a good plan, we could handle what that might do to us when it comes to fruition? -

ToothyMaw

1.5k

Cyber security companies are in the news being quite concerned with the growing capabilities of AGI's that can potentially infiltrate and corrupt corporate or private systems operations. — magritte

Okay, I see zero such news stories, and as far as I know AGI doesn't exist yet. That being said, everything you write sounds perfectly plausible, or perhaps even likely. I'm not entirely sure how to engage with this, not least because I don't have a solution other than what I told ucarr earlier in the thread.

Maybe I'm misinterpreting what you're saying, though. Could you provide some links to substantiate whatever it is that you are saying? -

magritte

596

Palo Alto Networks and Cisco regularly voice warnings about the dangers of advancing AI applications that might invade and corrupt corporate networks. This is not surprising, since it is their business to prevent cyber attacks. I don't recall the specific story I read, but you can look for yourself in the business news.

My own information is very recent, based merely on curiosity following Astorre's thread.

As an introduction, I watched the Amazon documentary The Thinking Game on the development of DeepMind. However you might rate this AI as it is currently used, the way Hassabis and company employed it was actually an AGI by my lights.

For current developments, I glanced through a research article (pdf 2026) and another research article (pdf 2025) because these are recent and come from professional researchers.

Wikipedia on AGI is very informative for a newcomer like myself, but it is not quite up-to-date and might always suffer from biases due to lack of competent peer review.

But you missed my poorly expressed point, whether that be right or wrong.

The journey of artificial intelligence went from highly controlled code for precisely defined applications to an open-minded thinking machine that is only taught to think for itself, to remember and build upon its successes to reach some form of achievement. This vagueness is built in.

What that means in practical terms is that an AGI does not compute or deduce its direction; it makes probabilistic guesses and then tests its hypotheses against its own objective or subjective criteria. That is how humans and animals think as well. We are not calculators; we intuit.
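To make the guess-then-test idea concrete, here is a toy sketch in Python. It is only an illustration of random guessing checked against a criterion, not a description of how DeepMind's systems or any real AGI work. The function names, the scoring criterion (closeness to the number 3), and the seeds are all invented for the example.

```python
import random


def guess_and_test(score, seed, steps=200):
    """Make random guesses and keep a new guess only if it scores better."""
    rng = random.Random(seed)          # each "twin" gets its own random stream
    best = rng.uniform(-10, 10)        # initial blind guess
    for _ in range(steps):
        candidate = best + rng.gauss(0, 1)   # probabilistic variation on the current guess
        if score(candidate) > score(best):   # test the hypothesis against the criterion
            best = candidate                 # remember the success and build on it
    return best


def score(x):
    # Hypothetical criterion, assumed for illustration: closer to 3 is better.
    return -(x - 3) ** 2


twin_a = guess_and_test(score, seed=1)
twin_b = guess_and_test(score, seed=2)
print(twin_a, twin_b)
```

Both runs end up near 3, yet they get there by different routes and stop at slightly different values, which is the small-scale version of twin AGIs not producing identical solutions.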
