-

frank

19k

Looking for information on identifying fake news and Russian bullshit and understanding the purpose behind it. For instance: why is Russia supporting Sanders?

This was published a while back, but it's about Finnish efforts to educate their public about fake news. Some of the ideas include checking for stock photos and a lack of personal info.

StopFake specializes in revealing Russian fake news about Ukraine.

Any additions would be appreciated. -

Relativist

3.6k

This was published a while back, — frank

Interesting article. What I'm struck by is the environment Trump has encouraged, by labeling real news as "fake", and sometimes retweeting what is actually fake news.

To your point, I think fact checkers are the most convenient tool. -

frank

19k

Interesting article. What I'm struck by is the environment Trump has encouraged, by labeling real news as "fake", and sometimes retweeting what is actually fake news. — Relativist

Why is he such an idiot?

To your point, I think fact checkers are the most convenient tool. — Relativist

I was looking for info on how to spot intentional misinformation. But yes, fact checking helps. -

Echarmion

2.7k

The “Russian misinformation” canard is itself misinformation. Have you ever seen a single piece of Russian misinformation? Worse, this canard is being used to justify seizing control of social media. — NOS4A2

Well, he just needs to look at your post history to see plenty of examples.

Looking for information on identifying fake news and Russian bullshit and understanding the purpose behind it. For instance: why is Russia supporting Sanders? — frank

I am not sure there is much one can do on a reasonable time budget other than checking trustworthy fact checkers. Wikipedia, if you also look at the talk page, can at least make you aware of contentious issues.

There is also the old saying: to figure out the party behind an obscure plot, consider who benefits. -

frank

19k

The “Russian misinformation” canard is itself misinformation. Have you ever seen a single piece of Russian misinformation? Worse, this canard is being used to justify seizing control of social media. — NOS4A2

I've been searching for "how to identify a Russian troll."

You fit the bill. You intentionally put up false information, which is in line with Russia's historic goal of just deluging the internet with false stories in order to create a kind of fog. It becomes harder to identify the truth.

This is info from Time magazine. -

NOS4A2

10.2k

I've been searching for "how to identify a Russian troll."

You fit the bill. You intentionally put up false information, which is in line with Russia's historic goal of just deluging the internet with false stories in order to create a kind of fog. It becomes harder to identify the truth.

This is info from Time magazine.

All it takes is one useful idiot to spread misinformation, as you’re doing now. The difference is I don’t need any resource beyond simple reason to teach me what’s true or false. -

frank

19k

There is also the old saying: to figure out the party behind an obscure plot, consider who benefits. — Echarmion

Lenin? My plan for this thread is to:

1. Explore reasons for Russia's choices.

2. Share ideas on how to resist divisiveness.

3. Show examples of known trolls.

So, yes, fact checkers are great, but there is so much misinformation (per what I've read so far) that I want to learn how to spot it. -

NOS4A2

10.2k

Anyway, if you aren't a Russian troll, you're basically doing the same kind of work.

You’re one of many self-proclaimed philosophers on this forum who believe I am a Russian troll without evidence. That’s how easy it is to adopt a lie and use it as a basis in your thought process. No resource will protect you from that kind of credulity. -

Echarmion

2.7k

1. Explore reasons for Russia's choices. — frank

To do that effectively, I think the first step would be to learn a bit about Russian history, especially the transition from Soviet rule to Putin. I myself know little, but there are some obvious things that many people don't consider, like how dangerous NATO seems to many Russians and how badly their first experiences with Western capitalism and democracy went.

Apart from that, I think the actual strategy is fairly simple. Russia uses a mixture of direct military intervention, economic incentives and disinformation to halt the advance of the EU and NATO, create a new system of client states around Russia, and regain superpower status. A weakened US is important for all of these.

2. Share ideas on how to resist divisiveness. — frank

Difficult. Technically, the best way would probably be to talk to people personally, but that's not really feasible on a large scale. I think I remember that, according to some early studies, correcting the misinformation that trolls spread is at least helpful.

3. Show examples of known trolls. — frank

I guess you have already spotted one. Though I don't think spotting trolls is usually the problem. The troll doesn't rely on not being spotted by the "regulars". Their job is to create fake debates and insecurity that will prevent passers-by, who may just have done some google search, from discovering "untainted" sources of information.

You’re one of many self-proclaimed philosophers on this forum who believe I am a Russian troll without evidence — NOS4A2

Actually, Nosferatu, there is tons of evidence. You have 2.5k posts, and each and every one of them is a piece of evidence for your character and intentions. -

Relativist

3.6k

The “Russian misinformation” canard is itself misinformation. Have you ever seen a single piece of Russian misinformation? — NOS4A2

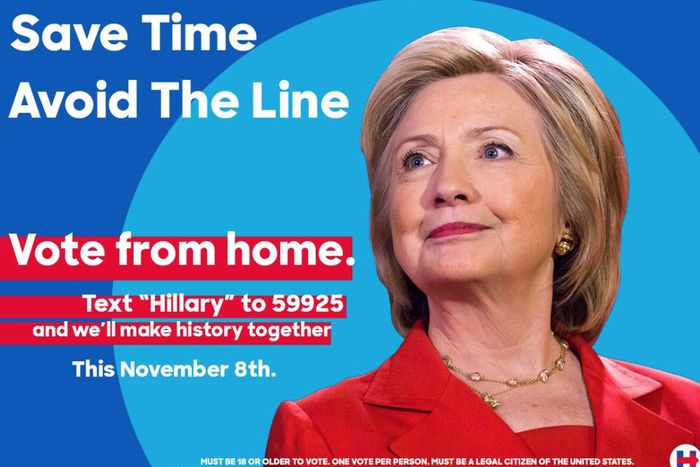

-

NOS4A2

10.2k

Actually, Nosferatu, there is tons of evidence. You have 2.5k posts, and each and every one of them is a piece of evidence for your character and intentions.

See what I mean? Your evidence is my post count. -

NOS4A2

10.2k -

alcontali

1.3k

Finnish efforts to educate their public about fake news — frank

It is the fake news itself that will educate the public on fake news.

That is actually an excellent thing.

After 70 years of television culture and unwarranted trust in the caste of professional liars, generalized skepticism and a deeply ingrained, knee-jerk reaction of seeking to corroborate and verify were long overdue.

The public will automatically apply what they have learned to the official narrative of the ruling elite, i.e. the worst fake news of all.

In that sense, fake news is a godsend.

It is a Good Thing (tm) that there is no longer a government-owned monopoly on lying to the public.

We definitely need more fake news and not less, and we certainly need much more competition in the fake-news marketplace. -

Relativist

3.6k

I found it with Google. You'll find this and similar ads referenced as examples of Russian disinformation in many articles about the topic. Congress also posted a resource that lists a number of additional examples of Russian disinformation. Ads like this would pop up on Facebook for targeted groups of people. You didn't see it because you weren't in a targeted group. Blacks and Latinos were targeted (there was also a Spanish version). -

fdrake

7.2k

After 70 years of television culture and unwarranted trust in the caste of professional liars, generalized skepticism and a deeply ingrained, knee-jerk reaction of seeking to corroborate and verify were long overdue. — alcontali

Generalised skepticism is useful for misinformation. If you're extremely skeptical of everything, you come to believe what you believe through what you're exposed to and saturated with, and that exposure is manipulable - like the unholy union between targeted advertising and political campaigning. You won't realise how your beliefs are shifting until you're a dogmatic Muslim living somewhere off grid subsisting solely off of Bitcoin, or shouting "There's a fine line between eugenics and racism!" at a Turkish-looking bloke in a bar, or believing that women are inherently whores and that you are unworthy of sexual intimacy because of the shape of your skull. All the while saying: it's those bloody normies that are so duped, take the red pill, duh.

I think the only effective means of combatting this crap from influencing you (as much as possible anyway) is doing what you can to curate your exposure to it. Expose yourself to news media that reacts more slowly, cites sources that have a good reputation and have independent journalism/research collation as a component (remember that stuff you get taught in school about primary, secondary and tertiary sources? Yeah, don't forget that). Getting news from established news sources rather than social media is a start, doing a mental exercise of noticing what website you're reading from (Do you recognise it? What sources does it cite for its information?).

Worse, this canard is being used to justify seizing control of social media. — NOS4A2

Which is great, really, the kind of measures that Facebook and Twitter took against ISIS were extremely effective at neutering their penetration in their platforms. The companies which own social media are in an extremely privileged place of control regarding information exposure, which affords them a great opportunity to cut the influence of organised disinformation and propaganda.

I was looking for info on how to spot intentional misinformation. But yes, fact checking helps. — frank

Don't have any resources for specifically Russian trolls, but the Daily Stormer published a guide on how to draw people into Nazism through social and news media savvy; the Huffington Post reported on it.

There was also an (alleged) FBI document circulating around leftist internet circles around 2009 which was a style guide for internet trolling to disrupt political online spaces. I've spent an hour or so trying to find it but can't.

If I remember right, it has a similar emphasis on simultaneously derailing discussions and innocuously deciding the rails which follow. @NOS4A2's posts are quite a lot like this; I like to imagine that they're actually a highly sophisticated troll that presents all of their misapprehensions as lures. If you start correcting their crap, they've already decided the terms of the discussion. If this plays out on social media, the overall discussion topic is what gets remembered (the lie, the misapprehension), and the impetus to correct it just carries the message further.

With that in mind, a pattern of shortly written posts which are clearly factional in a dispute, aim to be divisive, repeat known misinformation and attempt to discredit everything which doesn't fit in with the assumed frame (or rely lazily upon such a frame), and have an uncanny ability to derail discussions are good indicators that a person is trolling intentionally for some purpose. The aim is to saturate the space with their views rather than argue for them.

Of course, this being the internet, it's extremely difficult to tell a troll from a quick-to-react advocate of a position. -

NOS4A2

10.2k

Which is great, really, the kind of measures that Facebook and Twitter took against ISIS were extremely effective at neutering their penetration in their platforms. The companies which own social media are in an extremely privileged place of control regarding information exposure, which affords them a great opportunity to cut the influence of organised disinformation and propaganda.

I think it’s a bad idea to have any sort of centralized curation of information, let alone some committee of experts or commissars picking and choosing what info we are allowed to see. It reeks to me of state-sanctioned truth and censorship when a government threatens Facebook or Twitter to do more to tackle misinformation.

An unregulated social media leads to the distortion of truth (propaganda, misinformation), sure, but the regulation of information leads to the distortion of truth and its suppression. So I’d err on the side of an unregulated social media because suppression is absolute and mere distortion can always be rectified through democratic means. The freedom to access and impart information is too precious to hand over to some Silicon Valley pencil neck or unelected bureaucrat. -

fdrake

7.2k

I think it’s a bad idea to have any sort of centralized curation of information, let alone some committee of experts or commissars picking and choosing what info we are allowed to see. — NOS4A2

The algorithms already do that by themselves. There's just so much content available that it makes sense, in terms of generating revenue and keeping users on site, to curate exposure. Some smart casual commissar already decides how we get shown what we get shown, and we need laws to protect the "free marketplace of ideas" from its worst impulses, habits and co-options. -

alcontali

1.3k

You won't realise how your beliefs are shifting until you're a dogmatic Muslim living somewhere off grid subsisting solely off of Bitcoin — fdrake

Islam is something that I downloaded from the internet, just like my current Linux distro and my Bitcoin wallet. I wasn't born with any of that. I may now be in the middle of SE Asia but it is not particularly off-grid. I currently live in a condo building with swimming pool, gym, massage, and other amenities. I'm getting a lot for not much money, actually. Furthermore, it's rather in the suburbs of a larger city.

I'm looking to run into more downloads. It's good stuff! Last time, I literally downloaded real money!

you are unworthy of sexual intimacy because of the shape of your skull — fdrake

In my impression, the local version here would rather think "because of the insufficient amount of money in your wallet" but I guess that they will not openly admit that -- not in my experience -- and certainly not publicly. The theatre will rather be about "feelings that just aren't there". Ha ha ha!

But then again, I seem to sufficiently exceed local norms. So, we are talking more about theoretical considerations than anything truly practical. By the way, why would anybody move to a place on the globe where that kind of thing turns out to work less well for them than before? In the end, it is all about supply and demand. It's actually fun to talk about that in the local language. The better you can do that, the more silly things you will get to hear. Pick up a foreign dialect and you will be amazed!

I think the only effective means of combatting this crap from influencing you (as much as possible anyway) is doing what you can to curate your exposure to it. — fdrake

One would first have to be interested in the information!

There are exceptions, but I am probably not. I'd rather dig for interesting downloads.

For example, I was Googling for "arithmetic set" and then I ran into a search result that mentions "the Coven-Meyerowitz Conjecture". What the hell would that be? It probably doesn't matter, but it sounds intriguingly nondescript:

The Coven-Meyerowitz Conjecture states that being a tile is equivalent to a purely arithmetic condition on the set. — Arithmetic Sets in Groups by AZER AKHMEDOV and DAMIANO FULGHESU

Googling for nothing in particular is also how I originally ran into Bitcoin. Still, maybe the search term "arithmetic set" is just not worth it. I could even tire of it already tomorrow, or the day after that, because that happens all the time. -

frank

19k

I think the only effective means of combatting this crap from influencing you (as much as possible anyway) is doing what you can to curate your exposure to it. Expose yourself to news media that reacts more slowly, cites sources that have a good reputation and have independent journalism/research collation as a component (remember that stuff you get taught in school about primary, secondary and tertiary sources? Yeah, don't forget that). Getting news from established news sources rather than social media is a start, doing a mental exercise of noticing what website you're reading from (Do you recognise it? What sources does it cite for its information?). — fdrake

This is awesome advice. -

frank

19k

I guess you have already spotted one. Though I don't think spotting trolls is usually the problem. The troll doesn't rely on not being spotted by the "regulars". Their job is to create fake debates and insecurity that will prevent passers-by, who may just have done some google search, from discovering "untainted" sources of information. — Echarmion

Could you explain this a little more? So their goal is to just continuously pump out misinformation and stir up distrust? So, for instance, since we've spotted Nosferatu, we might start suspecting other people?

How does it affect passers by? -

Echarmion

2.7k

Could you explain this a little more? So their goal is to just continuously pump out misinformation and stir up distrust? So, for instance, since we've spotted Nosferatu, we might start suspecting other people?

How does it affect passers by? — frank

Well, if I think about what a troll is doing here, of all places, my conclusion is this: The Philosophy Forum probably ranks fairly highly on Google searches for philosophy in general. It has a pretty large and active thread named "Donald Trump". So someone looking up something concerning Donald Trump, and maybe the word "philosophy", might end up here. And since almost everyone here is highly critical of Trump, they'd normally find a fairly undivided message: a bunch of people criticizing Trump and his decisions, noting possible negative consequences, etc.

Now, with our vampiric friend, what they'd instead find is a lively "debate", where every post critical of Trump is followed by a Trump talking point. If someone is already inclined towards a certain position, they can now pick and choose whatever they like. And if someone is inclined to doubt this story or that, they can find confirmation. -

Tzeentch

4.4k

The average person is being bombarded with so much fake news, it's impossible to identify it all.

Your best bet is to be highly skeptical of anything the news tells you, and to automatically assume the situation is more complicated and nuanced than whatever easily digestible answers the news is trying to sell you. -

fdrake

7.2k

How does it affect passers by? — frank

Maybe a good "natural experiment" on this is to look at attitudes towards climate change. It's

A

(1a) settled that anthropogenic climate change is real

(2a) settled that the differences arising in the climate from our actions are relatively large

(3a) settled that we're already feeling these effects in social problems and extreme weather rates

But in discussions about it you see:

B

(1b) Attempts to discredit the sheer mountain of evidence of the reality of anthropogenic climate change by focussing on single (usually misattributed) study flaws or counter-variation (like the El Nino cycle) or erroneous claims.

(2b and 3b) Attempts to discredit claims that the difference caused by humans on the climate is large by focussing on "so it's an apocalypse now eh? What about the Mayans and the Millennium Bug!" like claims; false equivalences and hyperbole, then denying it's hyperbole. Looking at information in a highly decontextualised manner ("This part of the Greenland ice sheet has been growing this year!") to refute a well established general claim. "Who cares if this island's people have lost most of their land from sea level rises? It's an isolated case!" (ignoring literature on climate refugees)

If you imagine an observer coming into a social media discussion, they'll see supporters of settled science A speaking on a level playing field with supporters of lobbying derived bullshit B. Usually what appears to have happened is that a clickbait article about climate change has a bunch of falsehoods in it; skeptical individuals will realise that the article is clickbait and remain unconvinced, but precisely the same thing holds for reporting on well researched science.

Part of the effect is to create, as you put it, a fog where people can't tell true from false because they are equally skeptical of all claims. In a situation where someone's belief is not driven by contact with well reasoned argument and evidence, emotional and discursive factors play a bigger part in informing and maintaining belief; what's true becomes little more than what is presumed in what media you expose yourself to.

Another part of the effect relates to derailing - or controlling the conversation - making claims that lure people into responding and thus increasing the "broadcast strength" of the original message if the responses explicitly correct it and react to it rather than providing their own narrative. Even if everyone who responds to it disagrees with it. The "lure" works by exposing skeptical or hitherto unexposed people to a claim, or a framing context for a claim, in a situation of heated debate; so when you just "skim over it", you see good points from both sides, but one person (like @NOS4A2) is controlling the flow of conversation - what topics get brought up in what way, and what easy refutations there are for them. It's to the benefit of a position's exposure to be obviously wrong or implausible given an informed perspective and divisive between people who are uninformed (read: skeptical in the usual "the news is so biased now" sense) on a matter; that's good misinformation, it makes waves, it goes viral, it frames discourse, it creates bottlenecks of inquiry through saturation (want to know about X? well guess what, it's similar to Y on the internet - now you know about Y).

The major distinguishing feature, I think, between intentional misinformation and just being misinformed is that intentional misinformation about X is designed to intervene on how the discourse surrounding X works, not to work within it to assess and challenge claims. Being dispassionately reasonable about an issue when engaging someone who is intentionally misinforming can still help them saturate the medium with their message, or the presumptions underlying it.

The role that presumptions play is easier to see in talking about trans issues; a recent popular issue was "should trans women be allowed to compete in women's sports?", which is a neatly packaged clusterfuck overlapping the question of the distinction of natal sex and gender, the possible effects of bodily differences between trans women and cis women, and sports fairness intuitions. Misinformation is designed to make waves and push the exposed into a desired position: through divisive or occlusive framing, through taking a lot longer to correct than to state, and through appealing to the ultimately aesthetic and heuristic components of belief formation rather than the logical or analytic ones. -

frank

19k

Now, with our vampiric friend, what they'd instead find is a lively "debate", — Echarmion

If you imagine an observer coming into a social media discussion, they'll see supporters of settled science A speaking on a level playing field with supporters of lobbying derived bullshit B. — fdrake

So the goal isn't necessarily to convince anyone of the misinformation, it's just to generate debate which gives the impression that the matter isn't settled. For instance, if a person drops into this thread and sees that we're debating whether or not there is any Russian interference in American elections, then it helps picture Trump as being on one side of a debate, not just a bold-faced liar.

And it is really weird to have the POTUS just tossing out obviously false information. It's bizarre and hard to accept in the first place. There's emotion that's opposed to the truth in this case. Cut the ties to the facts, and emotion can do what it wants to do.

So the Russian troll is an agent of skepticism. That's fascinating. -

fdrake

7.2k

And it is really weird to have the POTUS just tossing out obviously false information. It's bizarre and hard to accept in the first place. There's emotion that's opposed to the truth in this case. Cut the ties to the facts, and emotion can do what it wants to do — frank

I think that's about how it works. Though there are other features of it.

If you successfully control what discourse is about, you can leave relevant bits out of it. An example with Trump: the kids in cages on the border and ICE are thrown at his feet, but Obama's presidency started it. This gets taken out of the context of "Trump and Obama actually treated migrants in a similar way" and put into the context of defending Trump through tu quoque.

Zizek has a good example about climate change and recycling: people can go apeshit at you if you've not put rubbish in the right bins - doing your bit to save the climate - but they don't go apeshit at industrial agriculture, petrochemical and oil CEOs. It's like "do your recycling!" suffices, because everyone has to do their part. This frames the whole thing in terms of individual actions instead of systemic interventions (Video from climate scientist on sensible systemic interventions).

There's also a time lapse involved in uptaking belief from misinformation and framing; it's not like a skeptical person immediately goes "I believe this!" to propaganda or misinformation, the effect occurs when heuristically searching for available information to interpret (in a broad sense including gut emotional response and snap judgements) a claim or situation, or in making a decision. If you ask pretty much anyone for their immediate mental image of "terrorist", they (and I) imagine some young Muslim bloke; that's the image in my head. It doesn't matter that much that the image is false, and acts of domestic terrorism are disproportionately committed by young white far right men, the framing effects take their toll.
