Ukraine Crisis

Right. Russian troops didn't just go to Ukraine in 2022. They went into Kazakhstan for "peacekeeping" after nationwide riots against the ex-Soviet Boomer leadership left at least 250 dead, including many members of the security forces. Obviously this deployment wasn't planned, though.

The deployment to Belarus, which seems indefinite, was planned ahead of time. The invasion of Ukraine was timed to coincide with sweeping changes to Belarus's laws, eroding what little token rule of law existed. In this sense, the operation was also about securing Belarus long term.

Terrible analogy because, as I've pointed out, even if NATO's actions were one of the more relevant factors in the decision to invade, there are obviously multiple other major factors.

Calculations about NATO may have shifted when Trump lost and was replaced by a less NATO-skeptical US leader, but plenty of other nations still opposed giving membership to Ukraine, so it hardly seemed membership was imminent.

But the big argument against NATO actions being the deciding factor, aside from the fact that Ukrainian membership did not seem likely, is the fact that Russia clearly did not take Western aid to Ukraine to date to be a serious threat. They clearly thought all that aid amounted to a small speed bump on their path to a three-day rout and conquest of Ukraine.

So, you have a military command that clearly doesn't take aid to Ukraine seriously, but then Russia felt it had to invade because Ukraine's military, the same one expected to fold almost instantly under Russian attack, had become too powerful?

Let's zoom out here. Why did Russia invade Ukraine in 2014? The main issue was Ukrainian ties to the EU. NATO wasn't signaling it was going to bring Ukraine in any time soon then. The EU, however, was looking more likely to include Ukraine, and offering a route in that direction. This threat to Russia seems way more relevant. EU membership was always more likely than NATO membership, and was gaining steam.

EU membership is probably more of an existential threat for Russia long term. It will mean a huge stream of aid and technical assistance for Ukraine. It will also mean more trade with the West.

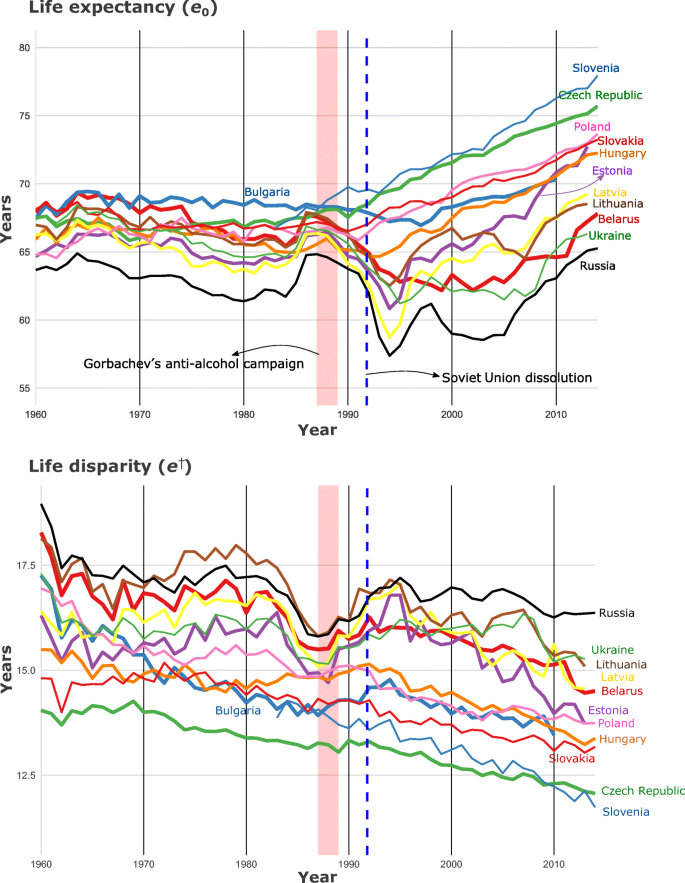

Eastern European countries that have entered the EU have seen far better growth than ones that have stayed more aligned with Russia. If Ukraine followed a similar trajectory, it would begin to experience a large uptick in growth and standards of living. Millions of Russians have family in Ukraine. Such a shift would be a powerful reminder of how poorly the Russian system is performing in terms of offering economic opportunity and mobility.

It'd be a more salient headline for the public than some of the others likely to come, such as Kazakhstan surpassing Russia in per capita GDP (China has already done so), or the former Warsaw Pact countries sans Germany surpassing its economy. -

Ukraine Crisis

NATO stepping up rhetoric vis-à-vis Ukraine's desire to join it was a predictable outcome of the Russian decision to invade Ukraine in 2014. "NATO will threaten to admit Ukraine, but then not actually do anything about it because they are decadent and weak," was the official Russian line then, and in the 8 years since. And indeed, NATO didn't allow Ukraine to join after the invasion, or at any point during 8 years of conflict. Point being, nothing you're quoting is remotely new, and so it isn't a very good explanation for the decision to invade.

In terms of more relevant events, I would look at Belarus and the Central Asian states. Belarus recently had massive protests to oust Lukashenko that required serious repression to beat back. As the world focused on the invasion of Ukraine, Belarus's ruling party rammed through a host of constitutional changes. These were based on changes Putin made to Russia's constitution, and include provisions such as acknowledging a special relationship with Russia in the constitution, and allowing Lukashenko to stay in power essentially for life.

The other major change was to allow a garrison of Russian soldiers to stay permanently in Belarus. This is widely seen as a way for Lukashenko to get around the unreliability of his own armed forces and for Putin to ensure an ally can't be removed.

Meanwhile, virtually every one of the former Soviet Central Asian states has had an uncharacteristically large protest movement or bout of unrest since 2019. Aside from unrest targeting the Russian-aligned elite, and the younger generation's increasing frustration with a Russia-aligned economic model that has failed to deliver growth, there is a pivot by these states to China, which represents a growing market for exports, a growing source of investment (e.g., Belt and Road), and a much stronger model of growth.

What changed recently wasn't NATO posture towards Ukraine, but circumstances in all of Russia's satellites. The ones in Europe look to the EU as a more prosperous system. The ones in Asia look to China. This seems more like a poorly thought out plan to reestablish Russian dominance in these satellites, not to deal with NATO. This was supposed to be a rapid drive into Kyiv demonstrating Russia's revived status as a major conventional power.

It is clear from the actions of the Russian military that the leadership did not actually consider that NATO aid to Ukraine would result in any significant resistance. Russia's own invasion plan and lack of any plans for dealing with strong resistance belies the idea that they thought Ukraine was actually becoming some sort of spearhead/garrison state. They very clearly thought it could be routed in 72 hours.

So, they were baited into an invasion by the growing threat of a country they thought they could easily rout with almost no losses?

Politics in Russian satellites and domestic Russian politics appear to have driven the decision, not static NATO posturing.

Of course Russian messaging now focuses on NATO. How else can they explain the Russian military's loss around Kyiv and their inability to seize Mariupol, right across the border? The claim that they are somehow fighting all of NATO, who also must have started the war, is about saving face over their terrible performance. Had the war gone as planned, the messaging would be all about NATO's irrelevance and decadence, similar to 2014.

But now that Russian modernization efforts have proven to be a failure, NATO giving Ukraine 0.032% of its annual defense budget, while still withholding most high end equipment, actually means Russia is fighting a war with NATO right now. It has to mean that, else how can they explain how their brilliant leader managed such a colossal disaster? -

Ukraine Crisis

Comparatively huge for the Black Sea fleet, I guess. That or journalists not knowing what they are talking about most of the time. A ship with a 500-man crew sounds huge if you know massive oil tankers run with 20-30 people.

Could be the real story. Could be made up to show that the Ukrainian-made Neptune works as a way to deter future amphibious assaults. They did just get Harpoon missiles from the UK, although those are fairly antiquated, so the Neptune might be more likely.

I had seen the ship discussed by hobbyist sites before, with the main point being "it would absolutely suck to be a damage control team on a ship with that much ordnance packed in." You can do plenty of things to prevent missiles from going off in a fire, but nothing is foolproof. This seems like part of the issue. The much smaller Israeli Hanit was hit by a C-802 and sailed back to port ok. This seems like a cook-off issue.

I don't know why Moscow thinks an accident sounds better. They could at least blame it on dastardly sabotage. It doesn't look good that their ships start going up in flames on their own as soon as they start using them, or that depots of crucial supplies on their side of the border "randomly" explode.

The Neptune is a Ukrainian-built system. It wasn't used previously because the missiles were being held in reserve in case of any amphibious landing attempts, likely around Odessa. Now that Ukraine has halted and reversed the Russian advance towards Odessa and is making gains in setting up the recapture of Kherson, they are more free to use the weapons. Also, British Harpoons mean they have more ordnance to use.

They were deployed in this case because a high value target stupidly sailed into the range of the system after engaging in a highly predictable patrol path for weeks, making the attack easy to plan.

Fair enough. I thought that was in reference to the rampant denialism of Russia's various 20th century genocides of ethnic minorities. Certainly, Ukraine has plenty of reasons for historical grievances, and Putin publishing a paper on how their ethnicity doesn't exist in the run up to the war doesn't help with fears of ethnic cleansing on their side.

I agree that the mass executions of civilians, rapes, and looting reported don't seem organized, and aren't on a scale consistent with the term genocide. It's more indicative of terrible discipline, maybe ethnically motivated in the case of some units, but that's impossible to say.

We'll see what happens if Russia keeps any land with large Ukrainian populations. I personally wouldn't be shocked by mass deportations as a means to "shore up" areas of control. Rhetorically, they've drifted in that direction, with the moniker of "khokhols" for Ukrainians as a mocking reference to the genocide. The term predates Stalin as a slur, and so has the added benefit of not being too on the nose about the whole "genocide thing that never really happened." -

Ukraine Crisis

What elements of the Holodomor, during which the USSR killed 3-5 million Ukrainians, somehow make it not genocide?

You can't exactly call it "accidental" when you requisition all the food, including the seed for future harvests, and burn the infrastructure to boot. Or when you pass a law against collecting left over grain scraps in the fields, so that any remaining calories in the area have to rot on the ground, and further blacklist areas that show any resistance by sealing them off from any ability to trade for food.

It also looks suspicious when ethnic Russians are 10% of the population at the outset of the famine and represent a tiny number of the fatalities, while you also move to settle Russians across depopulated areas and somehow have food for them.

Furthermore, moving 500,000+ people across Asia for slave labor and not providing for shelter from the elements, resulting in high fatality rates, and doing this directly in response to fears of nationalist sentiment looks like genocide too. So does massacring Ukrainian intellectuals and artists and destroying cultural artifacts at the same time that you forcibly starve millions, while you simultaneously move to settle the dominant ethnic group in their land, and then begin reeducation efforts to tell Ukrainians that their ethnicity never existed.

But hey, I guess if you wait 60 years for your archives to begin to open and run a totalitarian state, you can convince people of a lot of things...

That Putin also denies the Holodomor and the genocide of Poles under Stalin (does shooting 100,000+ people based on their ethnicity count?), "mourns" the passing of the USSR, or that Russians consistently vote for Stalin in polls for the most extraordinary leader in their country's history, says plenty about why relations with Ukraine, Poland, etc. are the way they are. -

Ukraine Crisis

Sure, there are worries about escalation. The alternative is for the US not to live up to its security guarantees to Ukraine, which also risks nuclear war. Because if the US doesn't live up to its obligations in Europe, Asian nations are likely to think it won't fulfill its obligations there. This could have the effect of making a Chinese attempt to seize Taiwan or press other territorial claims more likely. It would also make China's neighbors, four of whom are technologically advanced states capable of rapidly developing nuclear weapons, more likely to develop such a deterrent.

Non-proliferation research/efforts have long centered around the idea that conflicts are more likely when there are more parties involved, and so stopping nations from developing nuclear weapons, even stable allies like Korea and Japan, is a top priority.

Which gets us back to why the US had obligations to Ukraine in the first place. When the USSR collapsed, Ukraine was left with a nuclear arsenal. Obviously, Russia wanted the weapons back as well. Internal relations with Ukraine have not always been all that great, you know, the whole millions of people killed in a genocide in living memory, mass enslavement and deportations, etc.

To facilitate a reduction of the number of countries with nuclear weapons, the US made security promises to Ukraine in exchange for them delivering the weapons to Moscow, something Moscow agreed to.

With that in mind, how does everything said about reckless escalation not apply to Russia attacking a country that the US has openly promised to protect in coordination with Russia?

Aid is a calculated risk. Military aid to Taiwan, Korea, and Japan also angers China, a nuclear power. US involvement in any conventional skirmish would also increase the risks involved. However, it is totally unclear if the US saying: "sorry guys, you're on your own," would be less risky, since it would likely have resulted in those Asian nations fielding their own nuclear weapons, and Ukraine never having given up theirs. -

Ukraine Crisis

That talks about Russia's military success in Ukraine.

It talks about Russia's inability to mobilize combat power and the problem of runaway morale issues and casualties making most battalion tactical groups unusable for serious offensive maneuvers out east. I don't know if I'd call that success.

An assessment of Russian capabilities from last week, based on Russia's actual effectiveness in a war, seems a lot more relevant than assessments from 2016. Of course Russia experts were publishing on Russia's major efforts to modernize its armed forces after the Georgia debacle. Performance in Syria, while limited, was impressive at first (at least compared to low expectations).

The open question was always if the modernization efforts would root out enough corruption and nepotism to be effective. Russia's invasion stalling out less than 100 miles from the border due to bad logistics, the suicidal air assaults and VDV deployments without proper SEAD and follow-up ground support, the total inability to bring down the Ukrainian AA network or air force, and the total absence of anything resembling sustained, complex air operations answered a lot of those open questions.

We've now seen the military in action. We've seen the cheap Chinese tires mounted on $13 million AA systems leading to ruined rims and abandoned systems. We've seen them using completely open comms that civilians around the world can listen to because they lack a secure comms network. We've seen tank companies parked in big squares within Ukrainian artillery range because crews need to shout at each other due to bad comms (and the consequences of this as Ukrainian drones range and video the tanks, and shells begin falling). We've seen a slew of general officers and colonels killed because they have to go to the front to talk to their men due to bad comms. We've seen successful Ukrainian air raids into Russia proper to hit supplies in non-stealth, last-gen rotary wing aircraft, due to inept AA preparedness. The assessment is now that NATO would have a relatively easy time dismantling Russia's conventional forces in defensive operations. It all looks bad.

Vis-à-vis the nuclear threat, if you like Chatham House, look there:

It is always possible – although assumed to be highly unlikely – that Putin may decide to launch a long-range ballistic missile attack against the US, but he knows – as do all his officials – that this would be the end of Russia.

https://www.chathamhouse.org/2022/03/how-likely-use-nuclear-weapons-russia

The US has been practicing nuclear supremacy against Russia since the fall of the USSR. It has a large nuclear arsenal in close proximity for taking out Russia's nuclear assets on the ground. It has successful missile defense systems (how successful is unclear: the main Alaskan interceptors have a 99+% chance of shooting down an ICBM when four are assigned to a target, but the portable missiles in Europe have much less clear effectiveness; we only know they can shoot down ICBMs because Trump, IMO stupidly, decided to do a public test to show off). But even if US missile defense didn't exist, it would still be in a solid position to hit launch sites if it saw Russian assets moving on satellites.

Everyone takes the risk of nuclear war very seriously. No one wants to count on relatively untested defense measures. At the same time, there is definitely an assessment that Putin is not going to give a suicidal order to start a nuclear war (and that people wouldn't obey it even if given) over Russia getting control of Ukraine (the main benefits of which are prestige and control of gas fields and pipelines, whose profits will mostly be funneled to oligarchs anyhow). -

Ukraine Crisis

On the topic of competence, at least Russia has avoided cartoonish blunders.

Their flagship is an older, but heavily upgraded missile carrier specced out for area denial. It packs a ton of firepower. However, it does so at the cost of cramming 64 S-300 missiles and a further 16 P-500 cruise missiles, plus a few dozen short range SAMs, into a small area. It might seem like a bit too much high explosive in one place if you're one of the ship's 500 crewmen.

The cruise missiles seem pretty dear to Russia right now, given their sparing use of them despite the fact that they represent their safest option for taking out NATO arms shipments out in western Ukraine. Meanwhile, the area denial capabilities of the S-300 make the ship quite valuable.

So, it would be really silly to have it patrolling the Ukrainian coast within anti-ship missile range for no apparent reason. It is possibly providing AA around Kherson, AA against what is unclear. In any case, you wouldn't want to be just sailing it on a predictable loop for days on end because there is nothing for it to do, but it has to look busy. And even if you did this, once open sources were documenting it and making fun of the path, you wouldn't keep doing it, and go on sailing right into range of an anti-ship missile that in turn ignites all the high explosive you've got on board, right?

Oh...

Gotta have some way to funnel taxpayer cash to the contractors. -

Ukraine Crisis

On a less political note, is Russia's performance in this conflict, particularly the heavy losses for armored units, just indicative of the quality > quantity trend of modern warfare and the need to upgrade hardware continuously, or is it the death knell of the main battle tank?

I've seen quality analysis on both sides. That the US is throwing billions into M1s doesn't say much; it drops money into bad hardware all the time. Case in point, turning their rear echelon mobility vehicle, the Humvee, into a $250,000 gas-guzzling, quasi-IFV mound of armor that now sucks at all its roles, using a logic of "more casualties = let's weld even more armor on to it! Why do drivers need to be able to see?"

The new M1 has the ability to stream in overhead drone video, intercept incoming missiles and drones, detect when it is being painted by lasers, radar, etc. However, given the enhanced recon drones offer, and the advances in smart munitions, it seems like a survivable tank-like vehicle focusing on guided mortars and rockets might make more sense. And is a tank still a tank if it loses the main gun and turret?

I guess the next phase of the war might point one way or the other. Infantry anti-tank weapons and artillery seem to be working well for Ukraine on defense, but the argument for tanks also rests on your ability to push on the adversary's positions, something infantry anti-tank weapons are not ideal for. This seems to explain the shift in aid to more IFVs, APCs, and tanks (videos from Poland show trainloads headed east). -

Ukraine Crisis

Or more recently, Libya. Given modern war's tendency to send millions of people fleeing into Europe, and the political meltdowns this has caused, NATO, or at least EU nations, do seem to have something of a stake in preventing dire situations from cropping up near their borders (at the level of grand strategy at least). But clearly they have a problem of not wanting to commit the resources needed to actually ensure stability in these areas; it's more a groping attempt to find a path towards the lesser evils (in terms of humanitarian optics and the political/economic realities of migrant flows).

Russia/Belarus's tactic of giving migrants passage to EU borders to try to cause a rift in the EU goes along with this new reality.

As for Chinese missiles in Mexico, something like this has already happened. The Soviets' problem in Cuba was that they lacked the blue water navy to challenge a US blockade, dooming the project. China would face a similar problem for the foreseeable future.

China could try for such an arrangement, but it seems like that would just be a good way for them to drastically increase the risk of Taiwan, South Korea, or Japan developing their own deterrent to deal with a China taking an aggressive nuclear posture towards their main ally. Not to mention, China would have to somehow offer benefits that outweigh the costs of US sanctions, which is likely to be a very high cost, one they could only realistically support by pouring aid into a small country. Mexico, with its huge trade relations with the US, obviously isn't going to work, not to mention that their large population means the aid to make the deal a net benefit to them would be outside even China's (or the US's) price range. Maybe Venezuela would work, but there you have to worry about internal stability, and now you're far enough out that US missile defense becomes an issue again.

All that is sort of beside the point. Did Russia invade Ukraine in 2022 because the US set up missiles in Europe decades ago to counter the USSR?

The invasion and threatening nuclear war is the best thing Russia could have done to ensure those missiles stay there. The US isn't investing in new missiles to target Russia. Quite the opposite. The big investments have been in extending missile defense to Europe.

Russia has reason to be concerned there, in that the Aegis Ashore network has thousands of launchers capable of using the SM-3 Block IIA, which can demonstrably down ICBMs, and which can field a host of options for taking out intermediate range missiles at all parts of their flight path (by contrast, the high end ICBM interceptors up in Alaska aren't easily redeployable, and have cost multiple times Russia's annual defense budget). But the irony here is that this would not be as much of a threat to Russia if they didn't need to keep pounding the table and threatening to nuke civilian targets across Europe when their adventurism goes bad.

Were they developing a modern economy, instead of now falling behind China in per capita GDP, and had they not hemorrhaged over five million mostly young, mostly educated people to emigration (mostly to the evil West, mostly, in their own words, because they were fleeing Russia's backwardness) even before this war... maybe they could just develop new hardware to deal with the issue, instead of counting on 1980s weapons to keep them a "superpower."

The problem for Russia boosters is that their actions are absolute shit even from a realpolitik, grand strategy perspective. They are the actions of an insular, incestuous leadership class that is high on their own propaganda and disconnected from reality.

Not that it'll be any time soon, but from a grand strategy perspective, there is also the issue that their entire economy will go truly up in smoke if any sort of reliable non-fossil fuel energy source is developed and they haven't developed other industries. ITER in France, a tokamak with a 10:1 return on power, not Aegis or Minutemen, is Russia's biggest threat on its current path. -

Ukraine Crisis

I'm not being tight-lipped about assessments. Here is one for the current conflict I quoted at length earlier, as opposed to, say, a blurb about Syria, where Russia faced a band of infighting militias that were also getting attacked by Turkey, the US, the Peshmerga, and the Gulf States, while getting considerable support for its side from Hezbollah and Iran.

https://www.understandingwar.org/backgrounder/russian-offensive-campaign-assessment-april-9 -

Ukraine Crisis

You're not helping your case by bringing up an absolute meme site for "military rankings." GFP just shoves arsenals that exist on paper into a spreadsheet and takes no account at all of actual capabilities. E.g.: Are reserves actually trained and able to be mobilized quickly? Is mothballed hardware kept usable and up to date? Can the country supply its own munitions? Can they keep an active, encrypted comms network up? Is investment on paper being embezzled? Does it have a meritocratic leadership system? And most notably here, it takes absolutely zero account of logistics.

For example, an M1 tank can't be judged as a "unit of firepower." A division of the things can burn through half a million gallons of fuel a day. Without well drilled fuel teams and specialized equipment, they become a hell of a lot less effective. Without fuel in general, they become useless outside of dug in positions. There is a reason the US Army mothballed a ton of tanks, and instead is sinking almost a billion into upgrading just 70 tanks.

Outdated hardware is how you end up with all these videos of Russian tank columns spotted by drones getting lit up by artillery and being reduced to smoldering husks without ever engaging the adversary.

Far better to do more with less and have something like the SEPv3, where you have systems to shoot down incoming anti-tank missiles and drones, sensors for knowing if you're subject to laser targeting, and video feeds for drone recon elements that can use AI to locate potential ambush teams so you can light them up from outside line of sight.

More is better is definitely not proving true in modern warfare. This has been true at least since Mole Cricket, where Syria scrambled 100 MiGs only to see 86 shot down, along with more than two dozen SAM batteries destroyed, while not taking down a single Israeli fighter. A numbers based methodology is, frankly, hot garbage. The number of useful tanks is the number you can keep fueled and updated, not the number you have in storage.

Do we really think Syria had one of the most powerful militaries in the world in the run-up to their civil war? Egypt has a military 28% more powerful than Israel's and one more powerful than Turkey's? Why?

Because the US dumps hardware there as a pass through to defense contractors and because it has a huge number of soldiers due to the military functioning as a gigantic jobs and social stability program? Turkey has been developing its own hardware it can supply itself, including drones that have caused Russia a ton of pain, and bested Russia in a proxy war in 2020 (a conflict that is heating back up in light of perceived Russian weakness). Israel regularly makes its own upgrades to US hardware that the US ends up buying due to superior functionality, and has been involved in no shortage of conflicts the past 20 years. Outside the Sinai insurgency, which is quite small scale, Egypt's institutional experience is in machine gunning groups of protestors and starving Palestinians of supplies.

Not to mention that their methodology would have made Iraq one of the most powerful nations in the world before both Gulf wars.

So war or submission are your only options. Turns out you did learn diplomacy from a fucking pack of football hooligans after all.

Yes, war and submission are the only options when Russian tanks keep rolling into neighboring countries. You know, sort of like they have in Ukraine and Georgia, or Moldova before that (where they still haven't left), or their repeated violent repressions of Eastern Europe's attempts to free themselves from the Russian yoke from 1945 on. -

Ukraine Crisis

So yes, perhaps he'll be happy if he has that firm land bridge to Crimea. But then why not push Ukraine out of the Black Sea and have Odessa too?

Their inability to get across the Southern Bug back when they had fresh forces, the heavy casualties and counterattacks they faced there, and the fact that the Neptunes in Odessa make an amphibious assault likely a suicide mission that will result in an unambiguous mass fatality event.

I imagine it would be pretty hard to get soldiers, no matter how loyal, to countenance even getting on a ship when it's going to have next to no missile defense and going to be facing relatively long range, modern US and UK anti-ship missiles. -

Ukraine Crisis

Russia

Global superpower

Might want to rethink that. They couldn't support their advances more than 40 miles from their border. This isn't 1975.

Given Soviet-era helicopters have been able to make it through the vaunted Russian AA to hit strategic targets in urban areas across the border, I don't know if this is exactly a sea change. NATO has plenty of leverage to keep Ukraine from using the missiles on Russia. They're clearly getting fed extremely accurate intelligence. The US basically published Russia's exact invasion plan before it started as a way to dissuade them, and AWACS are likely painting every Russian aircraft as it takes off. Hence the case of the missing Russian air force and all the MANPADS shootdowns.

Also, it'd still be a good deal less than what Russia did in Vietnam to counter the US, or what China did in Korea. Russia will kick and scream about their nukes regardless because it's now the only thing keeping them relevant, but given the state of the rest of their military, and given the US now has ship-portable missiles that have successfully shot down ICBMs (and which work with the Aegis on-land launchers they have all over Europe), I don't think they think they are in a particularly good place to use that threat.

Also, what are you going to do, let Russia invade all of their neighbors because they will threaten to attack civilians with nukes every time they lose a war?

It was Putin's choice to reenact the Winter War for his own ego. -

Ukraine Crisis

They could respond. Aid to Ukraine so far is mostly only usable defensively. Anti-tank missile systems are far less effective when used offensively. NATO could supply systems that would make seizing Crimea more likely, or at least enough anti-ship missiles to make supplying it by sea untenable.

Poland's attempted donation of MiGs, for example, or Predator drones. Hell, even basic F-15 training for experienced pilots, enough to let them use standoff weapons to down Russian aircraft, is feasible. There is a lot more the West could do to ruin Russia's day, even without supplying manpower.

Giving Ukraine longer range missiles and technical assistance using them would make these multi-mile long convoys into death traps and greatly reduce the likelihood that Russia can get its new offensive rolling. They have pretty garbage anti-missile defense. The AA in general isn't looking too hot either given the Ukrainian raid on Belgorod, the continued action of the Ukrainian air force, or the fact that Israel flew 3,000+ sorties through their AA network in Syria in just one year without a single loss, hitting with impunity, often with no evidence of early warning reaching targets (the F-35 seems to be doing its job on some of these).

Even aside from that, they could give Ukraine more armor. Their use of guided artillery is absolutely pounding Russian tank columns:

https://youtu.be/PQ3R6bEB5RE

https://youtu.be/icSJPqkzupI

This won't work near as well on the offensive, since Russian tanks (should) be digging in instead of parking as a massive target for artillery. We haven't seen that to date, but you have to assume someone competent would start a change in tactics.

In order to retake Kherson and Mariupol, they'll need more IFVs and tanks, which NATO could provide in larger numbers. That they haven't, I think, betrays fears about Russia doubling down on the invasion if they begin losing territory. However, their actions against civilians in areas they've held are probably tipping support toward sending more armored vehicles. -

Ukraine Crisis

Poland is deploying top tier banter against Russia in Ukraine.

a caged bird summary

Maybe they can call on the Holy Mother to lead another Miracle of the Vistula, only a bit further East this time. If Russia hates NATO, imagine how much they'd hate a renewed Intermarium... You'd think ideas like that were in the graveyard of history, but given how Putin has so rapidly isolated Russia and made it reliant on China, it seems the "Tatar Yoke" might be making a comeback too. Maybe the Balkan nations would find it easier to negotiate in the EU with Germany and France under a unified, ceremonial Hapsburg state? I hear there is a surviving heir. It would make geography easier...

If I was Ukraine and really wanted to get under Russia's history obsessed skin, I'd rename the country something like "The Federal Republic of Kyivian Rus." -

Ukraine Crisis

Yeah, his photos in combat gear "near the fighting" were sourced to inside Russia. Pretty hilarious.

NATO has held back on higher end aid. My guess is that chemical weapons would lead to more of that being released, e.g., fighters from Poland that have been offered, more drones (the Turkish drones have caused Russia no end of grief and the US certainly could offer vastly superior drones in large numbers, but this hasn't happened because it would likely seem like too much of a provocation, plus they don't want any tech leaking), potentially more tanks, more long range missile systems.

I think there is a fear here that releasing too much aid might lead to very negative outcomes. If Russia's reorganized push with what are generally considered to now be mostly low combat effectiveness soldiers (low morale, large number of casualties, large amount of hardware lost) collapses, more weaponry could encourage Ukraine to push into areas lost in 2014.

This could have the opposite effect of what NATO wants if Putin feels his legitimacy is threatened by these losses. Russia certainly has the capability to continue the war long term (increased conscription, etc). This might result in political chaos in Moscow, but that's not really something NATO wants, or it could lead to even more unhinged attacks on civilian targets.

Kind of a balancing act.

Interesting update:

The Russian military is attempting to generate sufficient combat power to seize and hold the portions of Donetsk and Luhansk oblasts that it does not currently control after it completes the seizure of Mariupol. There are good reasons to question the Russian armed forces’ ability to do so and their ability to use regenerated combat power effectively despite a reported simplification of the Russian command structure. This update, which we offer on a day without significant military operations on which to report, attempts to explain and unpack some of the complexities involved in making these assessments.

We discuss below some instances in which American and other officials have presented information in ways that may inadvertently exaggerate Russian combat capability. We do not in any way mean to suggest that such exaggeration is intentional. Presenting an accurate picture of a military’s combat power is inherently difficult. Doing so from classified assessments in an unclassified environment is especially so. We respect the efforts and integrity of US and allied officials trying to help the general public understand this conflict and offer the comments below in hopes of helping them in that task.

We assess that the Russian military will struggle to amass a large and combat-capable force of mechanized units to operate in Donbas within the next few months. Russia will likely continue to throw badly damaged and partially reconstituted units piecemeal into offensive operations that make limited gains at great cost.[1] The Russians likely will make gains nevertheless and may either trap or wear down Ukrainian forces enough to secure much of Donetsk and Luhansk Oblasts, but it is at least equally likely that these Russian offensives will culminate before reaching their objectives, as similar Russian operations have done.

The US Department of Defense (DoD) reported on April 8 that the Russian armed forces have lost 15-20 percent of the “combat power” they had arrayed against Ukraine before the invasion.[2] This statement is somewhat (unintentionally) misleading because it uses the phrase “combat power” loosely. The US DoD statements about Russian “combat power” appear to refer to the percentage of troops mobilized for the invasion that are still in principle available for fighting—that is, that are still alive, not badly injured, and with their units. But “combat power” means much more than that. US Army doctrine defines combat power as “the total means of destructive, constructive, and information capabilities that a military unit or formation can apply at a given time.”[3] It identifies eight elements of combat power: “leadership, information, command and control, movement and maneuver, intelligence, fires, sustainment, and protection.”[4] This doctrinal definition obviously encompasses much more than the total number of troops physically present with units and is one of the keys to understanding why Russian forces have performed so poorly in this war despite their large numerical advantage. It is also the key to understanding the evolving next phase of the war.

US DoD statements that Russia retains 80-85 percent of its original mobilized combat power unintentionally exaggerate the Russian military’s current capabilities to fight. Such statements taken in isolation are inherently ambiguous, for one thing. They could mean that 80-85 percent of the Russian units originally mobilized to fight in Ukraine remain intact and ready for action while 15-20 percent have been destroyed. Were that the case, Russia would have tremendous remaining combat power to hurl against Ukraine. Or, they could mean that all the Russian units mobilized to invade Ukraine have each suffered 15-20 percent casualties, which would point to a greatly decreased Russian offensive capacity, as such casualty levels severely degrade the effectiveness of most military units. The reality, as DoD briefers and other evidence make clear, is more complicated, and paints a grim picture for Russian commanders contemplating renewing major offensive operations.

The dozens of Russian battalion tactical groups (BTGs) that retreated from around Kyiv likely possess combat power that is a fraction of what the numbers of units or total numbers of personnel with those units would suggest. Russian units that have fought in Ukraine have taken fearful damage.[5] As the US DoD official noted on April 8, “We've seen indications of some units that are literally, for all intents and purposes, eradicated. There's just nothing left of the BTG except a handful of troops, and maybe a small number of vehicles, and they're going to have to be reconstituted or reapplied to others. We've seen others that are, you know, down 30 percent manpower.”[6] Units with such levels of losses are combat ineffective—they have essentially zero combat power. A combination of anecdotal evidence and generalized statements such as these from US and other NATO defense officials indicates that most of the Russian forces withdrawn from the immediate environs of Kyiv likely fall into the category of units that will remain combat ineffective until they have been reconstituted.

Reconstituting these units to restore any notable fraction of their nominal power would take months. The Russian military would have to incorporate new soldiers bringing the units back up toward full strength and then allow those soldiers time to integrate into the units. It would also have to allow those units to conduct some unit training, because a unit is more than the sum of individual soldiers and vehicles. The combat power of a unit results in no small part from its ability to operate as a coherent whole rather than a group of individuals. It takes time even for well-trained professional soldiers to learn how to fight together, and Russian soldiers are far from well-trained. The unit would also have to replace lost and damaged vehicles and repair those that are reparable. The unit’s personnel would need time to regain their morale and will to fight, both badly damaged by the humiliation of defeat and the stress and emotional damage of the losses they suffered. These processes take a long time. They cannot be accomplished in a few weeks, let alone the few days the Russian command appears willing to grant. Russian forces withdrawn from around Kyiv and going back to fight in Donbas in the next few weeks, therefore, will not have been reconstituted. At best, they will have been patched up and filled out not with fresh soldiers but with soldiers drawn from other battered and demoralized units. A battalion’s worth of such troops will not have a battalion’s worth of combat power.

The Russian armed forces likely have few or no full-strength units in reserve to deploy to fight in Ukraine because of a flawed mobilization scheme that cannot be fixed in the course of a short war. The Russians did not deploy full regiments and brigades to invade Ukraine—with few exceptions as we have previously noted. They instead drew individual battalions from many different regiments and brigades across their entire force. We have identified elements of almost every single brigade or regiment in the Russian Army, Airborne Troops, and Naval Infantry involved in fighting in Ukraine already. The decision to form composite organizations drawn from individual battalions thrown together into ad hoc formations degraded the performance of those units, as we have discussed in earlier reports.[7] It has also committed the Russian military to replicating that mistake for the duration of this conflict, because there are likely few or no intact regiments or brigades remaining in the Russian Army, Airborne Forces, or Naval Infantry. The Russians have no choice but to continue throwing individual battalions together into ad hoc formations until they have rebuilt entire regiments and brigades, a process that will likely take years.

ISW has been spot on about the course of the war so far. They now see good conditions for counter attacks to retake Kherson, while the biggest threat will come from the axis further east.

Notably though, Russia kept its official conscription figures fairly normal, which was a good sign for peace, but now apparently they are doing behind the scenes conscription, including on the spot conscription at road blocks.

Russia has a 20-24 population of 3.45 million, versus 9.5 million for the US. It boggles the mind to think that the leadership is so into their groupthink that they think they can hide a major war with 15,000+ KIA and 40,000 casualties from the public long term, especially if they are using older reservists and more conscription. It's the equivalent of six times all the fatalities from Iraq and Afghanistan over twenty years occurring in just two months already. -
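For anyone who wants to check the "six times" comparison, here is a rough back-of-the-envelope sketch. The population and KIA figures are the ones quoted in the post; the figure of roughly 7,000 US military deaths in Iraq and Afghanistan combined is an assumption added for the calculation, not something taken from the post.

```python
# Figures quoted in the post above.
russia_pop_20_24 = 3.45e6
us_pop_20_24 = 9.5e6
russian_kia = 15_000

# Assumption (not from the post): roughly 7,000 US military deaths
# in Iraq and Afghanistan combined over about twenty years.
us_iraq_afghanistan_deaths = 7_000

# Scale Russian losses up to the size of the US 20-24 cohort.
scale = us_pop_20_24 / russia_pop_20_24
us_equivalent_kia = russian_kia * scale
multiple = us_equivalent_kia / us_iraq_afghanistan_deaths

print(f"population scale factor: {scale:.2f}")
print(f"US-equivalent KIA: {us_equivalent_kia:,.0f}")
print(f"multiple of Iraq + Afghanistan deaths: {multiple:.1f}")
```

Under those assumptions the multiple comes out a little under six, so the comparison holds as a rough order of magnitude.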

Ukraine Crisis

This is a pretty selective memory of the Bush era. News programs constantly called Bush out on the duplicity involved in making the case for the Iraq War. There was a major investigation into the torture program and attempts to try those responsible. Go watch old episodes of the Daily Show circa 2005-2008; it is wall to wall coverage of the Bush administration's infamies.

A number of Americans have been sentenced to long prison terms for improper use of force in Iraq and Afghanistan. In the most egregious cases, US prosecutors sought to execute US soldiers for these crimes (e.g., https://en.m.wikipedia.org/wiki/Mahmudiyah_rape_and_killings). Which is not to say the system is perfect, far from it, but it's ridiculous to paint the media as giving a blanket pardon to US military actions. Indeed, they often go too far the other way, removing all nuance and turning the leadership into cartoon villains.

Notably, the New York Times ran a full page ad of General Petraeus as "General Betray us," but wasn't shut down, and Noam Chomsky hasn't died of polonium poisoning.

Maybe Russia wouldn't be treated as an enemy if they hadn't launched multiple invasions of their neighbors over the last few decades? -

Esse Est Percipi

It seems that Berkeley has replaced the dualism between material and perception with a more ad hoc dualism between mortal perception and God's perceptions.

Yeah, that's a fair criticism. I've always found Kant's analysis of, in his words, phenomenal/noumenal dualism much more interesting.

He walks a fine, arguably at times incoherent, line between objective idealism and subjectivism, but the insights about how consciousness constructs our world are still brilliant, even today. They also have been surprisingly well confirmed by modern cognitive neuroscience.

This seems surprising at first, but is less so when you realize his categories of cognition map to logical distinctions which themselves sit at the center of how we think the world works based on the physical sciences. The connection between the logical and the actual is, on the one hand, unsurprising, evolution should have equipped us with a sense of "how things work," but on the other hand is one of the 'deeper' findings in the physical sciences from my perspective. -

Rasmussen’s Paradox that Nothing Exists

Any such assertions are clearly due to a lack of understanding of The Philosopher on the part of the student. Haven't you read your scholastic texts!?

That'll be 20 Hail Marys and a five-day bread fast. -

Rasmussen’s Paradox that Nothing Exists

As I mentioned earlier in this thread, I haven't really dug into symmetry. I've been exposed to the concept in physics books, history of science, etc. but haven't really grappled with the mathematics. That said, if I recall my lectures and texts correctly, you are able to "rotate" and "flip" these matrices (e.g., Pauli matrices for spin). So, you get lines like: "An interesting property of spin 1⁄2 particles is that they must be rotated by an angle of 4π in order to return to their original configuration."
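The 4π line can be checked numerically without much machinery. Below is a minimal sketch, assuming a rotation about the z axis so the rotation operator exp(-iθσ_z/2) is diagonal; the function and variable names are made up for illustration.

```python
import cmath
import math

def spin_half_rotation_z(theta):
    """2x2 rotation operator exp(-i*theta*sigma_z/2) for a spin-1/2 state."""
    phase = cmath.exp(-1j * theta / 2)
    return [[phase, 0], [0, phase.conjugate()]]

def apply(matrix, state):
    """Multiply a 2x2 matrix by a 2-component state vector."""
    return [sum(matrix[r][c] * state[c] for c in range(2)) for r in range(2)]

def close(a, b, tol=1e-12):
    """Component-wise comparison of two state vectors."""
    return all(abs(x - y) < tol for x, y in zip(a, b))

state = [1 / math.sqrt(2), 1 / math.sqrt(2)]  # spin pointing along +x
after_2pi = apply(spin_half_rotation_z(2 * math.pi), state)
after_4pi = apply(spin_half_rotation_z(4 * math.pi), state)

flipped = [-amp for amp in state]
print(close(after_2pi, flipped))  # a 2*pi rotation flips the sign of the state
print(close(after_4pi, state))    # a 4*pi rotation restores the original state
```

The sign flip after 2π has no effect on any single measurement of that one particle, which is part of why the property feels so strange; it only shows up in interference between rotated and unrotated states.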

I was always told that this area of physics had some of the most abstruse, difficult mathematics in the whole field and so have always been scared away from actually working through them. I'll stick to Feynman diagrams, causal diagrams, and differential equations thank you very much :groan: .

I thought I was pretty good at math and just had gaps from going to a terrible, collapsing school system growing up because I learned a good deal of complex statistical methods and taught myself to code in several languages. Then I tried to jump into this Teaching Company course on linear algebra, without all the prerequisites (their calculus classes are accessible), and realized there are some things I'll probably just never get and have to accept on good faith. -

Esse Est Percipi

Huh? I am?

Yes. The objections you are making only apply to certain types of idealism, namely those forms of subjective idealism embracing epistemological relativism or solipsism. These are fairly uncommon because writing them is self-defeating (if you don't think your audience exists, why bother?)

In philosophy, the term idealism identifies and describes metaphysical perspectives which assert that reality is indistinguishable and inseparable from human perception and understanding; that reality is a mental construct closely connected to ideas.[1] Idealist perspectives are in two categories: (i) Subjective idealism, which proposes that a material object exists only to the extent that a human being perceives the object; and (ii) Objective idealism, which proposes the existence of an objective consciousness that exists prior to and independently of human consciousness, thus the existence of the object is independent of human perception.

So, in objective idealism, ideas are still ontologically basic, but there is no question about them not being real when you aren't thinking about them.

So, is reality rose tinted or no?

No. Berkeley dedicates much time to illusions and hallucinations because these are the obvious objections to his system. His main point is that the world appears to work according to a set of natural laws (physics, biology, etc.). God gives us these laws for our instruction. While God could make an animal live even while its heart is stopped, he wouldn't do so because the laws are for our edification. The laws of science hold in Berkeley and so we can infer from them how colored glasses work.

I get where you are coming from though. If you take Berkeley as being solipsistic, then the world should be changing, like you say. But here you have to remember that God is at the center of Berkeley. God is omniscient. God perceives all ideas at all times, and so these ideas have definite properties. The problem is partly with Berkeley, who wants to make his clever argument against materialism on purely philosophical grounds, but then ends up pulling God in to avoid the problems his refutation of materialism has created for him in maintaining realism. Later Idealists handled this much better IMO.

God is also extremely involved in allowing basic "physical" interactions to occur at every level in Berkeley.

This set up was not popular. I think it's fair to say Berkeley's critique of materialism had more interest than the specifics of what he replaced it with. -

Esse Est Percipi

By the way, while Berkeley is realist about ideas, he still represents "subjective idealism," in that ideas only exist insomuch as they interact with minds (but they are not within minds as solipsism postulates, see the quotes above in this thread).

Not all idealism is subjective idealism. Kant, by many scholars' estimation, represents a sort of blended idealism. The noumena are not in our minds; there is a sort of dualism in Kant. We only see objects as our faculties allow us to. That said, he also denotes how these faculties are, in at least some places, shaped by logical necessity. Thus, the categories of the faculties have an epistemological as opposed to solely psychological status.

Kant's version of idealism is not without some apparent contradiction. In response to this we get forms of "objective idealism," most influentially, Absolute Idealism. People sometimes deeply misunderstand Absolute Idealism as taking Kant to the conclusion that the noumenal doesn't exist. This isn't the right frame. The Absolute encompasses all possibility. It does not contain a subjective/objective split, because it stands above and encompasses both.

Absolute Idealism centers around how universal reason dictates the coming into being of the world, and a real world at that. However, this world is premised on self-positing Spirit, and so there is no ontological divide between what is experienced and the objects of experience, both obtain within the Absolute.

For an excellent breakdown of this, Gary Dorrien's Kantian Reason, Hegelian Spirit is extremely cogent and provides a lucid overview of many points of view in the scholarship on this. It's mostly towards the end of the second chapter if you can snag a copy.

It looks at philosophy through the lens of theology, but it is a great overview of the philosophy in its own right. Also a rare book covering German Idealism that is so lucid that the audio version is actually usable, although it still requires playing it at like .9 speed, rewinding, and pausing a lot. -

SEP re-wrote the article on atheism/agnosticism.

Good distinction here. I fear for the safety of cats if the Taliban were to find out they were all definitionally atheist. -

Esse Est Percipi

I'm certainly not saying that, but that is essentially how it works in Berkeley. Like I said, he is relatively silent on the idea/mind interaction, so it's not totally clear how the mechanics of this work outside of God's mediating role. He does have a section somewhere where he says minds don't take on the attributes of ideas when they are interacting with them (e.g., minds don't become colored when seeing red). There is an idea/spirit dualism here somewhat similar to the physical/mental divide.

It's a fairly incomplete system, partly owing to its age I'd say, since there are similar types of oversights in Locke. Newer systems have the benefit of knowing a few centuries' worth of critical questions they need responses to. -

Esse Est Percipi

But for the idealist, there is no such remove between the phenomenal and reality. So, when the rose colored glasses are worn, the idealist is committed to say that reality itself changes. When such a result is arrived at, it is time to discard the theory

This is simply not true. You are treating the realism/anti-realism question and the physicalism/idealism question as the same thing. They aren't. History shows plenty of cases of proto-physicalist anti-realism (ancient religions have this quite often), while many idealist ontologies are realist.

If you read Berkeley or Kastrup you will find realism explicitly stated. You may find the way they ground realism lacking, but it is most certainly there. When you take off the rose colored glasses in Berkeley, the world doesn't change, you just don't have tinted glasses on. Chairs and rocks are real, they just aren't material.

It's not like Sankara, where Maya is actually an illusion. -

This Forum & Physicalism

Yeah, that's sort of where I was headed at first thinking about these things. The problem of parts being traits though is that it seems like objects reduce to fundamental particles, as in your example.

Interestingly, there are forms of realism people have proposed where the only universals/forms are the fundamental particles. I've never seen nominalism of this sort before, but I could see how it would work. Fundamental particles would be the only tropes, and tropes would really just be names for the excitations of quantum fields we observe.

These are pretty neat. The problem they might have for your stand point comes up here:

Each particular thing is unique, in itself, having a thisness all to itself,

Physicists generally claim that fundamental particles do lack haecceity. Lately though, there has been some debate as to how indiscernible particles really are. In some cases, they may not be fully indiscernible; the jury is out.

For the most part though, we are told not to assume that an electron we trapped in a box will remain the same electron when we open the box. Or, another proposed way to look at it is to say there is only one electron. The electron is not affected by time, and so it can be everywhere at once.

For a bit more detail:

French & Redhead’s proof is based on the assumption that when we consider a set of n particles of the same type, any property of the ith particle can be represented by an operator of the form Oi = I(1) ⊗ I(2) ⊗ … ⊗ O(i) ⊗ … ⊗ I(n), where O is a Hermitian operator acting on the single-particle Hilbert space ℋ. Now it is easy to prove that the expectation values of two such operators Oi and Oj calculated for symmetric and antisymmetric states are identical. Similarly, it can be proved that the probabilities of revealing any value of observables of the above type conditional upon any measurement outcome previously revealed are the same for all n particles.

The original paper.

An easier write up.
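The quoted result can be sanity checked for the smallest case: two particles, each with a two dimensional Hilbert space. This is only a sketch under those assumptions. The operator `O` and the single-particle states `a` and `b` are arbitrary choices made up for illustration, not anything taken from the paper.

```python
import math

def kron_vec(a, b):
    """Tensor product of two single-particle state vectors."""
    return [x * y for x in a for y in b]

def kron_mat(A, B):
    """Tensor (Kronecker) product of two square matrices."""
    n, m = len(A), len(B)
    return [[A[i // m][j // m] * B[i % m][j % m] for j in range(n * m)]
            for i in range(n * m)]

def expectation(op, psi):
    """<psi|op|psi> for a normalized state psi."""
    dim = len(psi)
    op_psi = [sum(op[r][c] * psi[c] for c in range(dim)) for r in range(dim)]
    return sum(p.conjugate() * q for p, q in zip(psi, op_psi)).real

def exchange_state(a, b, sign):
    """Normalized |a>|b> + sign * |b>|a>, with sign = +1 or -1."""
    raw = [x + sign * y for x, y in zip(kron_vec(a, b), kron_vec(b, a))]
    norm = math.sqrt(sum(abs(x) ** 2 for x in raw))
    return [x / norm for x in raw]

identity = [[1, 0], [0, 1]]
O = [[2, 1 - 1j], [1 + 1j, -1]]   # arbitrary Hermitian single-particle operator
O1 = kron_mat(O, identity)        # O acting on particle 1
O2 = kron_mat(identity, O)        # O acting on particle 2

a = [1, 0]                        # arbitrary normalized single-particle states
b = [0.6, 0.8j]

for label, sign in (("symmetric", +1), ("antisymmetric", -1)):
    psi = exchange_state(a, b, sign)
    print(label, expectation(O1, psi), expectation(O2, psi))

# For contrast: an unsymmetrized product state does tell the particles apart.
product = kron_vec(a, b)
print("product", expectation(O1, product), expectation(O2, product))
```

In the symmetric and antisymmetric states the two expectation values agree, so no observable of this form picks out "particle 1" as opposed to "particle 2". The plain product state is only there to show what distinguishability would look like.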

Now, keep this lack of haecceity in mind and think of how different particles might be seen to function very much like the way letters function in a text (a "T" is always a T; the specific T is meaningless, only its role in a word matters). Words are made up of letters, but words can have properties like "adjective" or "noun." Their traits don't come from their parts. Then, their role as subject or predicate in a sentence is further not derived from their letters, but by their relationship to other words.

So these assumptions are not true at all. The lines and angles are traits. The line and the angle are concepts which are traits of the concept of triangle, and they are also the parts of the triangle.

But then which letter/concept in a word holds the trait "noun?" Which parts can be summed up into the concept "noun?" This property can't just be attributed to the rules of spelling, the way the rules of geometry denote "triangle" from the slope of a triangle's three lines, because random mixes of letters can be proper nouns in fiction novels and we create new words all the time. Additionally, words have a meaning when spoken as well as written, and illiterate people can understand words without the letters that make them up.

Same holds for the trait of "predicate." It seems the traits a word possesses can't just be coming from the parts, no? In this case, a part of a sentence gets its trait from a whole that it is a part of, in the same way eyes have the trait of being organs due to being parts of whole bodies. Even if the components of words are actually "ideas," I don't know how this gets you to nouns being subjects of sentences, as in the property of the word "Jamie" in a sentence like "Jamie is a dog," which features two nouns.

[an object] it is defined by the true propositions made about it

And here is the other big problem with these fundamental systems: fundamental particles can't be triangles, they don't have a color, they can't be circles, they can't be lighter or darker than each other, they can't be translucent, etc. All these properties are emergent.

So, if traits are actually just parts, I'm not sure I see a way for propositions such as "the block Thomas picked up is triangular" to have truth values. Because the block is actually made up of atoms that aren't triangular, and if you say that the triangularity comes from the block's parts, then you are admitting that objects can have traits that their parts lack, and of course "being made of atoms" isn't necessary or sufficient as a cause of being triangular.

Arguably, the block isn't triangular. It's like Mandelbrot's map of the British coastline, which actually has infinite length because you can always measure at finer and finer detail, which will reveal ever smaller irregularities in the coast line that add to its length. So, the edge of the block is actually a roiling landscape of microscopic bumps, not a straight line.

However, this seems like a pretty big blow to propositions. You can get around this problem with Mandelbrot's insight that the same shape has a different number of dimensions when viewed from different perspectives. His example was a ball of string. From very far away, it is a zero dimensional point. As you get closer, it becomes a one dimensional line of string. Get closer still, and it is a three dimensional cylindrical entity. Get closer still, to the scale of fundamental particles, and it is now a group of zero dimensional points. (The insightful kicker here is that the string has fractional dimensions at different points along this analysis).
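To make the coastline point concrete, here is a small sketch using the Koch curve as a stand-in for a coastline. That substitution is an assumption for illustration only, since a real coast is not exactly self-similar, but it shows how measured length keeps growing as the ruler shrinks, and what a fractional dimension looks like as a number.

```python
import math

def koch_length(iterations, base_length=1.0):
    """Total length of a Koch curve after the given number of refinements."""
    # Each refinement replaces every segment with 4 segments, each 1/3 as long.
    return base_length * (4 / 3) ** iterations

def ruler_size(iterations, base_length=1.0):
    """Length of the individual segments (the 'ruler') at that refinement."""
    return base_length / 3 ** iterations

for n in range(0, 9, 2):
    print(f"ruler {ruler_size(n):.5f} -> measured length {koch_length(n):.3f}")

# Similarity dimension: 4 copies at 1/3 scale gives log(4) / log(3).
koch_dimension = math.log(4) / math.log(3)
print(f"similarity dimension: {koch_dimension:.4f}")
```

The measured length never settles on a value, while the dimension comes out between one and two, which is the sense in which the "edge" is more than a line but less than a surface.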

But if you do this, you're back to looking at the traits of the object as a whole, not the parts. -

Is materialism unscientific?

This presupposes that what cannot be empirically verified is non-scientific

Yes, I think that's correct, with a rather large caveat. Plenty of scientific theories are impossible to vet empirically currently. For example, multiple interpretations of quantum mechanics predict identical results. However, ways of testing between some of these have been proposed and more will likely follow in the future. Same sort of thing goes for M theory.

Proposals in science don't have to be falsifiable (e.g., Many Worlds). They do need some sort of connection to the findings of science in those cases though.

Many Worlds is a result of taking the Schrödinger Equation to its logical conclusions. Taking the equation seriously is supported by empirical results that demonstrate its accuracy in describing the world (except for that pesky collapse problem).

Meanwhile, many of the problems of ontology are necessarily not open to empirical inquiry, and it's hard to see how science can move the needle on them much. It's a very blurry difference, I will agree, but you need some sort of distinction to give science any definition at all. -

Is materialism unscientific?

I don't think most ontological claims are possible to vet empirically, so they can't be scientific. That said, science often informs our ontology and sometimes ontologies do make claims that science may be able to support or undermine.

With that in mind, parts of any ontology can be scientific. For example, the rise of information based ontologies comes from insights in quantum mechanics and the physics of how information is stored, particularly in black holes. If parts of the holographic principle are undermined by later discoveries in physics, it would have implications for these ontologies. I'm not even sure what to call information ontologies. They have more in common with physicalism than anything else, but seem distinct enough from it to warrant their own label.

I feel like the creation of fully sentient behaving AI, and fully immersive virtual reality (probably involving some sort of direct stimulation of the brain) would be a boost to the credibility of physicalism in many ways, but, because some of the issues involved don't lend themselves to scientific analysis, there will still always be gaps reasonable people can disagree on when favoring one ontology over the other. -

Esse Est Percipi

I don't think I'm reading my concerns into Berkeley at all. I'm not particularly amenable to Berkeley's overall system. I do think he hit on something very significant about the limits of knowledge though.

Re: Spirits vs Ideas

Ideas are objects of minds. They only exist as perception:

This perceiving, active being is what I call mind, spirit, soul, or myself. By which words I do not denote any one of my ideas, but a thing entirely distinct from them, wherein they exist, or, which is the same thing, whereby they are perceived; for the existence of an idea consists in being perceived.

Spirits

A Spirit is one simple, undivided, active Being: as it perceives Ideas, it is called the Understanding, and as it produces or otherwise operates about them, it is called the Will.

Hence there can be no Idea formed of a Soul or Spirit: For all Ideas whatever, being Passive and Inert, vide Sect. 25. they cannot represent unto us, by way of Image or Likeness, that which acts.

Now, the way he gets ideas to interact with spirits is certainly open to plenty of criticisms, but it doesn't fall victim to infinite regress, nor does it rely on a circle of self-perception per . -

Esse Est Percipi

Paradigms in science shift all the time, and then the previously accepted model gets rejected in the same manner. Luminiferous aether, Bowley's Law, etc. Super gravity gives way to super string theory which gives way to M theory. The world has three dimensions... until it has 11. Physical forces act locally, until instantaneous action at a distance shows up. Information in black holes vanishes forever, until it turns out it radiates out. Same thing because both are attempts to make inferences from experience in a systematic way. -

Esse Est Percipi

It's equally a problem for both as far as arguments for solipsism, being a brain in a vat, being misled by Descartes' demon, etc. are concerned. The arguments against radical skepticism don't really depend on physicalism vs dualism vs idealism.

The point I was making was merely that, contrary to popular arguments, every critique based on the unreliability of perception made against idealism or dualism applies equally to physicalism. Like I said, there is also an argument to be made that idealism is simply more parsimonious, in that it doesn't have to posit that a set of abstractions that exists within thought are actually a description of what has ontic status.

In terms of explanatory power vis-à-vis the "Hard Problem of Consciousness," idealism has advantages over dualism and physicalism. Variants of substance dualism have to contend with the issue of how mind substance, which is totally different from physical substance, interacts with it. Substance dualism also struggles with why consciousness only shows up in organisms with complex nervous systems. After all, if mind is not based in matter, why shouldn't pens and cars be conscious?

Type dualism gets around this issue with the claim that consciousness is a totally different type of thing, but that physical forces are still ontologically basic. I don't know if this even counts as what most people mean by dualism. Type and predicate dualism have always seemed eminently reasonable to me.

Type dualism basically has the same problem as physicalism: how can you explain how subjectivity arises from physical interactions? But at least here, type dualism has less of a problem because it claims that physics can't tell us why experience is what it is. Physicalism that rejects type dualism requires also explaining this last bit, and here it seems it may face insurmountable challenges. Because in physicalism where consciousness is not its own type, you are asking a set of abstractions, which are experienced as merely one element of mental life, to explain the qualitative experience of other elements satisfactorily. And this, I think, is why you get bonkers theories from this camp, namely the claim that qualia don't exist.

IIRC, there's nothing in Berkeley's speculation that says 'to be is to be self-perceived'. And even if so, that's mere solipsism.

This is a fundamentally inaccurate reading of Berkeley. It only makes sense if you just look at the phrase "to be is to be perceived," out of context, and ignore his entire metaphysics. External objects are "ideas" in Berkeley; people are "spirits." Spirits are the things that are of themselves and do the perceiving. Unfortunately, he doesn't really develop how ideas are experienced by the mind in depth, but there is a sort of dualism between ideas and spirits. "To be is to be perceived," is explicitly about ideas, as Berkeley gets into when he is refuting the idea that spirits are "ideas in the mind of God," or that people's spirits are a part of God. He sticks to Christian orthodoxy here, i.e., spirits (people) are ontic entities separate from God and created by God.

There is no infinite regress or solipsism in Berkeley even aside from the role of God. God's existence at the center of the ontology is also explicitly non-solipsistic. -

Esse Est Percipi

It's not a wild claim. We've known since the 1960s that only a very small fraction of the processes in the brain make it to conscious experience. R. Scott Bakker's Blind Brain Theory paper has a good summary of this and analyzes it from an eliminative materialist perspective.

We also know that the environment has way more information than organisms can absorb without succumbing to the entropy that threatens to overwhelm all self-organizing systems.

For example, memories are not stored sensory data. The brain uses the same areas for memory that it uses to process new incoming sensory data. It creates memories and imagination anew each time. Most of our experience is the product of extrapolation from a small set of incoming data. For example, you don't experience your blind spot, the space is "filled in." So too you don't experience just how terrible peripheral vision is and its lack of color. All of that "filled in" experience is essentially a hallucination, the result of computational extrapolation. -

Esse Est Percipi

By the way, I consider myself a physicalist. I am just aware that the ontology has significant unresolved issues and undermines itself through trying to enforce dogmatic adherence to its precepts.

-

Esse Est Percipi

My sentence doesn't negate that at all. Ask yourself, if no experiments could have shown evidence for electrons, would we say they exist? Why do we say the N-rays once proposed by science don't actually exist? Why do we no longer say luminiferous aether is the medium that carries light?

In each case, it is because of observations. We thought we had observations of the aether, but it turned out another theory explained our observations better. Sans observations, there is no science. The observations include the optical illusion, but only through observation can you ever tell that there is an optical illusion. So when you attack the credibility of observation, you're also attacking the credibility of arguments for physicalism and science as a whole. People don't see this connection because they are used to getting third person descriptions of the physical world as a story of facts, but these facts are all derived, at least in part, from observation, and confirmed by observation.

The point about the rose colored glasses is particularly apt. That IS the argument against physicalism. Just reframe it: "if you assume you have an abstract thought model that explains reality, and you interpret all experience using that model, does that mean your model is actually a reflection of reality?"

The rose colored glasses critique applies every bit as well to physicalism, it is just less clear because the latter is a complex system of overlapping abstractions, a "lens" for thinking. -

Esse Est Percipi

Yeah, it's debatable. He might be right though. There is a regular cottage industry of PhDs in the physical sciences presenting new, non-physicalist ontologies rooted in the findings of the physical sciences themselves. "It From Bit," is a popular one with many major variations, including simulation theory, or Tegmark's "the world is mathematics."

Notably, the most popular interpretation of physics among physicists, Copenhagen, leaves the question of "being without observation" unaddressed and in many formulations calls such questions "meaningless." Hardline, old-school 19th Century physicalism is alive and well in the wild, but dead in science. The new physicalism has non-local action, no objective world in some formulations, and a massive proliferation of unobservable dimensions in many others. -

Esse Est Percipi

He uses an analogy to multiple personality disorders. The universe is a mind, granted a very strange one. Objects in the universe are what they appear to be to us, and are ontologically based in mentation. So, while physicalism claims that the physical supervenes on all things observed, the corollary here is less of a positive claim. Things appear to us as mental objects, so why suppose they are something different?

Conscious beings are minds dissociated from the surrounding mental substrate. The brain-behavior link is explained by the fact that brains are part of the extrinsic view of another mind. That is, neuroscience gives us a view of a mind from the viewpoint of another mind, in the same way that behaviorism is also a way of viewing other minds by how they are represented in our minds.

It's funny that you say idealism is just extra steps. One of the main arguments in the book is that physicalism is the ontology with extra steps.

I have to agree with him here. Idealism is saying "things are what they appear to be." To be sure, our intuition about how things are is often wrong (optical illusions, the discovery of microbes), but it was observation, something that occurs in the mind, that told us all about bacteria, protons, quarks, etc.

Physicalism is saying, "no, actually what you experience isn't the real deal. You essentially hallucinate a world. The real stuff is the abstract model of the world we use to understand and predict observations. Yes this abstraction is only accessible as a component of thought, but it is actually ontologically basic."

It only appears simpler because it is accepted dogmatically and passed over outside graduate-level science courses (and not commonly even then) and philosophy. Ironically, most of the new ontologies that compete with physicalism these days come from physicists themselves. -

Esse Est Percipi

Yup. Now this fact is often put forth as a refutation of Berkeley, but it doesn't work unless you misunderstand his position.

This sort of epistemological problem is universal. Asserting an external world doesn't somehow erase the major quandaries in epistemology that have dogged us for millennia. You can be a physicalist and still be troubled that you can't prove you're not a brain in a vat. Physicalists, dualists, and idealists all have to concede that the universe, complete with all our memories and the evidence of its history, could have actually sprung into existence just 12 minutes ago, and we'd all be none the wiser. These problems aren't unique to idealism.

Idealism does not entail anti-realism. Berkeley thought rocks and chairs existed. They were just mental objects. Thus, idealism can work fine with science. Science is just the description of how phenomenal objects relate to one another. Its predictive power is in no way reduced in idealism.

Idealism also does not entail solipsism.

One of the weakest common counterarguments to idealism is: "if the world is mental, how come we can't will ourselves to fly, or will ourselves out of death?" This never made sense to me. Can you will yourself to not feel sad when a loved one dies? Can you will yourself to remain perfectly calm at all times? Do you never get distracted or fall asleep without deciding to? Experience does not dictate that mental = controllable.

For a modern version of idealism, this book is quite good:

The attacks on physicalism are well organized and delivered very well, although they aren't particularly novel. The competing idealist ontology laid out is sort of "meh," though. It uses dissociative mental disorders as a key analogy and I don't know if it really works. It's also a little too hawkish on the findings for contextuality in quantum mechanics from what I understand, although he worked at CERN, so he has more familiarity than I do, I'm sure. He also sells information ontologies short and doesn't represent them very well. But the reason I bring it up is that it shows an ontology based on modern science that avoids solipsism, is realist about external objects, and retains idealism. -

This Forum & Physicalism

This is not true at all. It is generally proposed in metaphysics, and supported by evidence, that a whole is greater than the sum of its parts. There is a logical fallacy, the composition fallacy, which results from what you propose.

I was speaking to identity in that post. The phrase "parts and wholes" is misleading there. When we say an object has the trait of being a triangle, or that it instantiates the universal of a triangle, we aren't referring to any one of its angles, right?

The concept of emergence and the composition fallacy don't apply to bundle theories of identity. A "trait" is not a stand-in for a part of an object. For example, traits aren't parts in the sense that a liver is a part of a human body or a retina is part of an eye.

A trait - that is, a trope (nominalism) or the instantiation of a universal (realism) - applies to the emergent whole of an object. Traits have to do so to serve their purpose in propositions. For example, the emergent triangularity of a triangle is a trait. The slopes of the lines that compose it are not traits, they are parts (they interact with traits only insomuch as they affect the traits of the whole). The way I wrote that was misleading, but the context is the identity of indiscernibles.

Traits are what allow propositions like "the bus is red," or "the ball is round" to have truth values. The sum total of an object's traits is not the sum total of its parts. It is the sum of all the predicates that can be attached to it. So an object that is "complex" but which is composed of "simple" parts still has the trait of being complex.

So to rephrase it better, the question is "is a thing defined by the sum of all the true propositions that can be made about it, or does it have an essential thisness of being unique to it?"

And this is nothing but nonsense. What could a "substratum of 'thisness'" possibly refer to?

Yes, that is the common rebuttal I mentioned. It sounds like absurd gobbledygook. Now, its supporters claim that all ontologies assert ontologically basic brute facts, and so this assertion is no different, but it seems mighty ad hoc to me. That this theory still has legs is more evidence of the problems competitors face than its explicit merits.

You attempt to make the description, or the model, into the thing itself. But then all the various problems with the description, or model, where the model has inadequacies, are seen as issues within the thing itself, rather than issue with the description.

This sort of "maps versus territory" question-begging accusation is incredibly common on this forum. It's ironic because in the context in which it is normally delivered, re: mental models of real noumena versus the real noumena in themselves, it is itself begging the question by assuming realism.

As a realist, I still take the objection seriously, but I'm not totally sure how it applies here.

This is not really true. Time is a constraint in thermodynamics, but thermodynamics is clearly not the ground for time, because time is an unknown feature. We cannot even adequately determine whether time is variable or constant. I think it's important to understand that the principles of thermodynamics are applicable to systems, and systems are human constructs. Attempts to apply thermodynamic principles to assumed natural systems are fraught with problems involving the definition of "system", along with attributes like "open", "closed", etc..

The "thermodynamic arrow of time," refers to entropy vis-à-vis the universe as a whole. Wouldn't this be a non-arbitrary system?

I agree with the point on systems otherwise. I don't think I understand what "time is an unknown feature," means here. Is this like the "unknown features" of machine learning?